PaddleClas series model object, used to load a PaddleClas model exported by the PaddleClas repository.

#include <model.h>

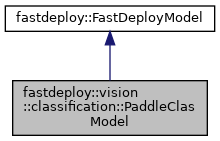

Inheritance diagram for fastdeploy::vision::classification::PaddleClasModel:

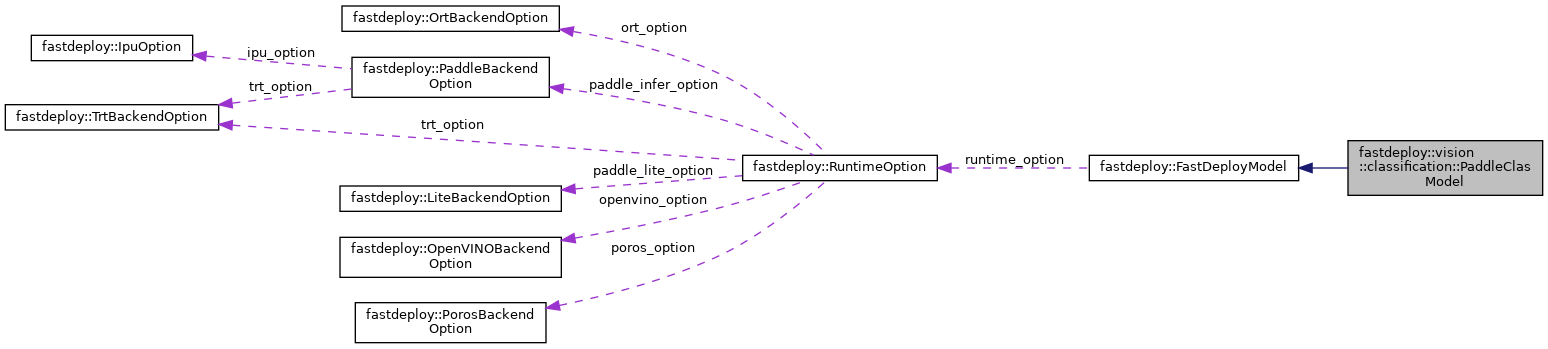

Collaboration diagram for fastdeploy::vision::classification::PaddleClasModel:

Public Member Functions

| Return type | Member function | Description |
| --- | --- | --- |
| | PaddleClasModel (const std::string &model_file, const std::string &params_file, const std::string &config_file, const RuntimeOption &custom_option=RuntimeOption(), const ModelFormat &model_format=ModelFormat::PADDLE) | Set the paths of the model file and configuration file, and the runtime configuration. |
| virtual std::unique_ptr< PaddleClasModel > | Clone () const | Clone a new PaddleClasModel, which uses less memory when multiple instances of the same model are created. |
| virtual std::string | ModelName () const | Get the model's name. |
| virtual bool | Predict (cv::Mat *im, ClassifyResult *result, int topk=1) | DEPRECATED, to be removed in version 1.0. Predict the classification result for an input image. |
| virtual bool | Predict (const cv::Mat &img, ClassifyResult *result) | Predict the classification result for an input image. |
| virtual bool | BatchPredict (const std::vector< cv::Mat > &imgs, std::vector< ClassifyResult > *results) | Predict the classification results for a batch of input images. |
| virtual bool | Predict (const FDMat &mat, ClassifyResult *result) | Predict the classification result for an input image. |
| virtual bool | BatchPredict (const std::vector< FDMat > &mats, std::vector< ClassifyResult > *results) | Predict the classification results for a batch of input images. |
| virtual PaddleClasPreprocessor & | GetPreprocessor () | Get a reference to the PaddleClasModel preprocessor. |
| virtual PaddleClasPostprocessor & | GetPostprocessor () | Get a reference to the PaddleClasModel postprocessor. |

Public Member Functions inherited from fastdeploy::FastDeployModel

| Return type | Member function | Description |
| --- | --- | --- |
| virtual bool | Infer (std::vector< FDTensor > &input_tensors, std::vector< FDTensor > *output_tensors) | Run inference with the runtime. This interface is called inside Predict(), so in most cases Infer() does not need to be called directly. |
| virtual bool | Infer () | Run inference with the runtime, reading input from the class member reused_input_tensors_ and writing results to reused_output_tensors_. |
| virtual int | NumInputsOfRuntime () | Get the number of inputs of this model. |
| virtual int | NumOutputsOfRuntime () | Get the number of outputs of this model. |
| virtual TensorInfo | InputInfoOfRuntime (int index) | Get input information for this model. |
| virtual TensorInfo | OutputInfoOfRuntime (int index) | Get output information for this model. |
| virtual bool | Initialized () const | Check whether the model was initialized successfully. |
| virtual void | EnableRecordTimeOfRuntime () | Debug interface that records the runtime time (backend + h2d + d2h). |
| virtual void | DisableRecordTimeOfRuntime () | Stop recording the runtime time; see EnableRecordTimeOfRuntime() for details. |
| virtual std::map< std::string, float > | PrintStatisInfoOfRuntime () | Print runtime statistics to the console; see EnableRecordTimeOfRuntime() for details. |
| virtual bool | EnabledRecordTimeOfRuntime () | Check whether EnableRecordTimeOfRuntime() is enabled. |
| virtual double | GetProfileTime () | Get the runtime's profiling time after profiling has finished. |
| virtual void | ReleaseReusedBuffer () | Release the reused input/output buffers. |
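The enable/disable/report pattern of the timing interfaces above can be pictured with a small standard-library sketch. It is not FastDeploy's implementation. The RuntimeTimer class, its Record() method, and the "iterations" and "avg_time" map keys are hypothetical. They were chosen only to mirror the std::map< std::string, float > return type of PrintStatisInfoOfRuntime().

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical recorder illustrating the Enable/Disable/Print pattern of the
// FastDeployModel timing interface; this is not FastDeploy code.
class RuntimeTimer {
 public:
  void Enable() { enabled_ = true; }
  void Disable() { enabled_ = false; }
  bool Enabled() const { return enabled_; }

  // Record the duration (in milliseconds) of one runtime call.
  // Calls made while recording is disabled are ignored.
  void Record(float ms) {
    if (enabled_) durations_.push_back(ms);
  }

  // Summarize the recorded durations; the key names are hypothetical.
  std::map<std::string, float> Stats() const {
    std::map<std::string, float> stats;
    float total = 0.0f;
    for (float d : durations_) total += d;
    stats["iterations"] = static_cast<float>(durations_.size());
    stats["avg_time"] = durations_.empty()
                            ? 0.0f
                            : total / static_cast<float>(durations_.size());
    return stats;
  }

 private:
  bool enabled_ = false;
  std::vector<float> durations_;
};
```

In real code, the value passed to Record() would be measured around the backend call plus the host-to-device and device-to-host copies, for example with std::chrono::steady_clock.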

Additional Inherited Members

Public Attributes inherited from fastdeploy::FastDeployModel

| Type | Attribute | Description |
| --- | --- | --- |
| std::vector< Backend > | valid_cpu_backends = {Backend::ORT} | The model's valid CPU backends, i.e. all CPU backends that have been successfully tested with this model. |
| std::vector< Backend > | valid_gpu_backends = {Backend::ORT} | |
| std::vector< Backend > | valid_ipu_backends = {} | |
| std::vector< Backend > | valid_timvx_backends = {} | |
| std::vector< Backend > | valid_directml_backends = {} | |
| std::vector< Backend > | valid_ascend_backends = {} | |
| std::vector< Backend > | valid_kunlunxin_backends = {} | |
| std::vector< Backend > | valid_rknpu_backends = {} | |
| std::vector< Backend > | valid_sophgonpu_backends = {} | |

Detailed Description

PaddleClas series model object, used to load a PaddleClas model exported by the PaddleClas repository.

Constructor & Destructor Documentation

◆ PaddleClasModel()

fastdeploy::vision::classification::PaddleClasModel::PaddleClasModel (const std::string &model_file, const std::string &params_file, const std::string &config_file, const RuntimeOption &custom_option = RuntimeOption(), const ModelFormat &model_format = ModelFormat::PADDLE)

Set the paths of the model file, parameter file, and configuration file, and the runtime configuration.

- Parameters
  - [in] model_file Path of the model file, e.g. resnet/model.pdmodel
  - [in] params_file Path of the parameter file, e.g. resnet/model.pdiparams; this parameter is ignored if the model format is ONNX
  - [in] config_file Path of the deployment configuration file, e.g. resnet/infer_cfg.yml
  - [in] custom_option RuntimeOption for inference; by default the CPU is used, with a backend chosen from valid_cpu_backends
  - [in] model_format Format of the loaded model; the default is Paddle format
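The constructor's three path parameters follow a per-model directory layout. The sketch below builds them from a directory name, using the example file names given in the parameter docs. The Format enum, ModelFiles struct, ResolveModelFiles() helper, and the model.onnx file name are hypothetical stand-ins, not FastDeploy types or conventions.

```cpp
#include <string>

// Hypothetical stand-in for FastDeploy's ModelFormat; only the two formats
// named in the constructor documentation are modeled.
enum class Format { PADDLE, ONNX };

// The three path arguments expected by the PaddleClasModel constructor.
struct ModelFiles {
  std::string model_file;
  std::string params_file;  // ignored by the constructor for ONNX models
  std::string config_file;
};

// Build paths that follow the example layout from the parameter docs:
// <dir>/model.pdmodel, <dir>/model.pdiparams and <dir>/infer_cfg.yml.
ModelFiles ResolveModelFiles(const std::string& dir, Format format) {
  ModelFiles files;
  files.config_file = dir + "/infer_cfg.yml";
  if (format == Format::PADDLE) {
    files.model_file = dir + "/model.pdmodel";
    files.params_file = dir + "/model.pdiparams";
  } else {
    files.model_file = dir + "/model.onnx";  // hypothetical file name
    // params_file stays empty because it is not read for ONNX models.
  }
  return files;
}
```

With the real library, these strings would be passed as the first three constructor arguments, with ModelFormat::ONNX as model_format when loading an ONNX model.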

Member Function Documentation

◆ BatchPredict() [1/2]

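virtual bool fastdeploy::vision::classification::PaddleClasModel::BatchPredict (const std::vector< cv::Mat > &imgs, std::vector< ClassifyResult > *results)

Predict the classification results for a batch of input images.

- Parameters
  - [in] imgs The input image list; each element comes from cv::imread()
  - [out] results The output classification result list

- Returns
  - true if the prediction succeeded, otherwise false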
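The result-list contract of BatchPredict() can be illustrated with a naive per-item loop. Under that contract, each input produces one result in input order, and a failure makes the call return false. Result and NaiveBatchPredict() are hypothetical stand-ins. This section does not say that PaddleClasModel batches this way, and a real batched implementation may send all inputs through the runtime together.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for ClassifyResult (class ids and their scores).
struct Result {
  std::vector<int> label_ids;
  std::vector<float> scores;
};

// Fill *results with one entry per input, in input order, by calling a
// single-input classifier `classify(const Input&, Result*) -> bool`.
// Returns false if results is null or if any single prediction fails.
template <typename Input, typename Fn>
bool NaiveBatchPredict(const std::vector<Input>& inputs,
                       std::vector<Result>* results, Fn classify) {
  if (results == nullptr) return false;
  results->assign(inputs.size(), Result{});
  for (std::size_t i = 0; i < inputs.size(); ++i) {
    if (!classify(inputs[i], &(*results)[i])) return false;
  }
  return true;
}
```

Because results[i] always corresponds to imgs[i], callers can zip inputs and outputs by index after a successful call.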

◆ BatchPredict() [2/2]

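virtual bool fastdeploy::vision::classification::PaddleClasModel::BatchPredict (const std::vector< FDMat > &mats, std::vector< ClassifyResult > *results)

Predict the classification results for a batch of input images.

- Parameters
  - [in] mats The input mat list
  - [out] results The output classification result list

- Returns
  - true if the prediction succeeded, otherwise false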

◆ Clone()

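virtual std::unique_ptr< PaddleClasModel > fastdeploy::vision::classification::PaddleClasModel::Clone () const

Clone a new PaddleClasModel, which uses less memory when multiple instances of the same model are created.

- Returns
  - a std::unique_ptr to the new PaddleClasModel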
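One common way to make a clone cheaper than a fresh load is to share the read-only weights between instances and copy only per-instance state. The sketch below shows that general idea with std::shared_ptr. Weights and TinyModel are hypothetical, and this section does not say how PaddleClasModel::Clone() achieves its memory savings.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical read-only model weights, loaded once.
struct Weights {
  std::vector<float> values;
};

// Hypothetical model whose clones share weights but not per-instance state.
class TinyModel {
 public:
  explicit TinyModel(std::vector<float> values)
      : weights_(std::make_shared<Weights>(Weights{std::move(values)})) {}

  // Return a new instance pointing at the same weights, mirroring the
  // std::unique_ptr return type of Clone().
  std::unique_ptr<TinyModel> Clone() const {
    return std::unique_ptr<TinyModel>(new TinyModel(weights_));
  }

  const Weights* weights() const { return weights_.get(); }
  long weight_owners() const { return weights_.use_count(); }

  std::string last_input;  // per-instance state, not shared between clones

 private:
  explicit TinyModel(std::shared_ptr<const Weights> weights)
      : weights_(std::move(weights)) {}

  std::shared_ptr<const Weights> weights_;
};
```

Holding the weights as shared_ptr<const Weights> keeps them immutable, so clones can share them without extra copies while each clone keeps its own mutable state.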

◆ Predict() [1/3]

virtual bool fastdeploy::vision::classification::PaddleClasModel::Predict (cv::Mat *im, ClassifyResult *result, int topk = 1)

DEPRECATED: predict the classification result for an input image. This overload will be removed in version 1.0.

- Parameters
  - [in] im The input image data, from cv::imread()
  - [out] result The structure the output classification result is written to

- Returns
  - true if the prediction succeeded, otherwise false
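This deprecated overload takes a topk argument. The standard-library sketch below shows one way to keep the k highest-scoring classes from a score vector. TopK() and the Result struct are hypothetical stand-ins, and the label_ids/scores field names are an assumption about ClassifyResult. This is not PaddleClasPostprocessor's code.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical stand-in for ClassifyResult.
struct Result {
  std::vector<int> label_ids;
  std::vector<float> scores;
};

// Keep the topk highest scores (in descending order) and their class ids.
// topk is clamped to [0, number of classes]; ties keep the lower id first.
Result TopK(const std::vector<float>& class_scores, int topk) {
  std::vector<int> ids(class_scores.size());
  std::iota(ids.begin(), ids.end(), 0);  // 0, 1, ..., num_classes - 1
  std::size_t k = std::min<std::size_t>(
      topk > 0 ? static_cast<std::size_t>(topk) : 0, ids.size());
  // Only the first k positions need to be sorted.
  std::partial_sort(ids.begin(), ids.begin() + static_cast<std::ptrdiff_t>(k),
                    ids.end(), [&](int a, int b) {
                      if (class_scores[a] != class_scores[b])
                        return class_scores[a] > class_scores[b];
                      return a < b;  // deterministic tie-breaking
                    });
  Result result;
  for (std::size_t i = 0; i < k; ++i) {
    result.label_ids.push_back(ids[i]);
    result.scores.push_back(class_scores[ids[i]]);
  }
  return result;
}
```

std::partial_sort is used because only the k best classes need ordering, which avoids sorting the full class list when k is small.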

◆ Predict() [2/3]

virtual bool fastdeploy::vision::classification::PaddleClasModel::Predict (const cv::Mat &img, ClassifyResult *result)

Predict the classification result for an input image.

- Parameters
  - [in] img The input image data, from cv::imread()
  - [out] result The output classification result

- Returns
  - true if the prediction succeeded, otherwise false

◆ Predict() [3/3]

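virtual bool fastdeploy::vision::classification::PaddleClasModel::Predict (const FDMat &mat, ClassifyResult *result)

Predict the classification result for an input image.

- Parameters
  - [in] mat The input mat
  - [out] result The output classification result

- Returns
  - true if the prediction succeeded, otherwise false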

The documentation for this class was generated from the following files:

- /fastdeploy/my_work/FastDeploy/fastdeploy/vision/classification/ppcls/model.h

- /fastdeploy/my_work/FastDeploy/fastdeploy/vision/classification/ppcls/model.cc

1.8.13
