Runtime object used to inference the loaded model on different devices. More...

#include <runtime.h>

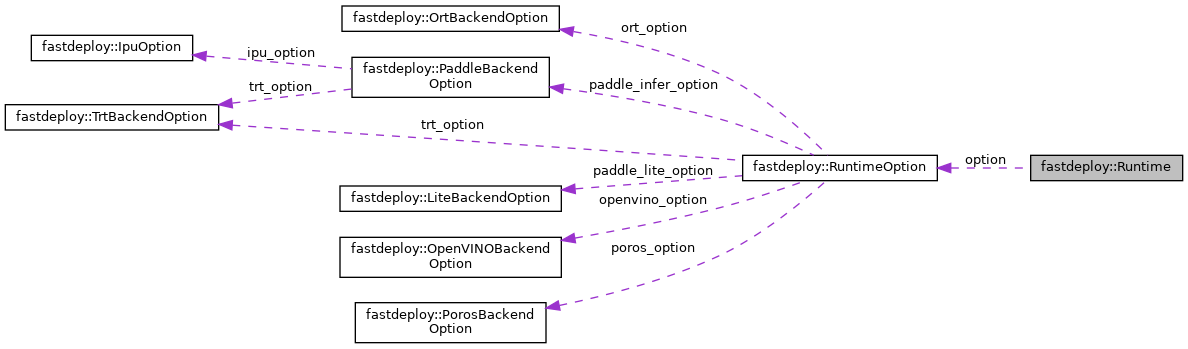

Collaboration diagram for fastdeploy::Runtime:

Public Member Functions | |

| bool | Init (const RuntimeOption &_option) |

| Intialize a Runtime object with RuntimeOption. | |

| bool | Infer (std::vector< FDTensor > &input_tensors, std::vector< FDTensor > *output_tensors) |

| Inference the model by the input data, and write to the output. More... | |

| bool | Infer () |

| No params inference the model. More... | |

| int | NumInputs () |

| Get number of inputs. | |

| int | NumOutputs () |

| Get number of outputs. | |

| TensorInfo | GetInputInfo (int index) |

| Get input information by index. | |

| TensorInfo | GetOutputInfo (int index) |

| Get output information by index. | |

| std::vector< TensorInfo > | GetInputInfos () |

| Get all the input information. | |

| std::vector< TensorInfo > | GetOutputInfos () |

| Get all the output information. | |

| void | BindInputTensor (const std::string &name, FDTensor &input) |

| Bind FDTensor by name, no copy and share input memory. | |

| void | BindOutputTensor (const std::string &name, FDTensor &output) |

| Bind FDTensor by name, no copy and share output memory. Please make share the correctness of tensor shape of output. | |

| FDTensor * | GetOutputTensor (const std::string &name) |

| Get output FDTensor by name, no copy and share backend output memory. | |

| Runtime * | Clone (void *stream=nullptr, int device_id=-1) |

| Clone new Runtime when multiple instances of the same model are created. More... | |

| bool | Compile (std::vector< std::vector< FDTensor >> &prewarm_tensors) |

| Compile TorchScript Module, only for Poros backend. More... | |

| double | GetProfileTime () |

| Get profile time of Runtime after the profile process is done. | |

Detailed Description

Runtime object used to inference the loaded model on different devices.

Member Function Documentation

◆ Clone()

| Runtime * fastdeploy::Runtime::Clone | ( | void * | stream = nullptr, |

| int | device_id = -1 |

||

| ) |

Clone new Runtime when multiple instances of the same model are created.

- Parameters

-

[in] stream CUDA Stream, defualt param is nullptr

- Returns

- new Runtime* by this clone

◆ Compile()

| bool fastdeploy::Runtime::Compile | ( | std::vector< std::vector< FDTensor >> & | prewarm_tensors | ) |

Compile TorchScript Module, only for Poros backend.

- Parameters

-

[in] prewarm_tensors Prewarm datas for compile

- Returns

- true if compile successed, otherwise false

◆ Infer() [1/2]

| bool fastdeploy::Runtime::Infer | ( | std::vector< FDTensor > & | input_tensors, |

| std::vector< FDTensor > * | output_tensors | ||

| ) |

Inference the model by the input data, and write to the output.

- Parameters

-

[in] input_tensors Notice the FDTensor::name should keep same with the model's input [in] output_tensors Inference results

- Returns

- true if the inference successed, otherwise false

◆ Infer() [2/2]

| bool fastdeploy::Runtime::Infer | ( | ) |

No params inference the model.

the input and output data need to pass through the BindInputTensor and GetOutputTensor interfaces.

The documentation for this struct was generated from the following files:

- /fastdeploy/my_work/FastDeploy/fastdeploy/runtime/runtime.h

- /fastdeploy/my_work/FastDeploy/fastdeploy/runtime/runtime.cc

1.8.13

1.8.13