Option object used when create a new Runtime object. More...

#include <runtime_option.h>

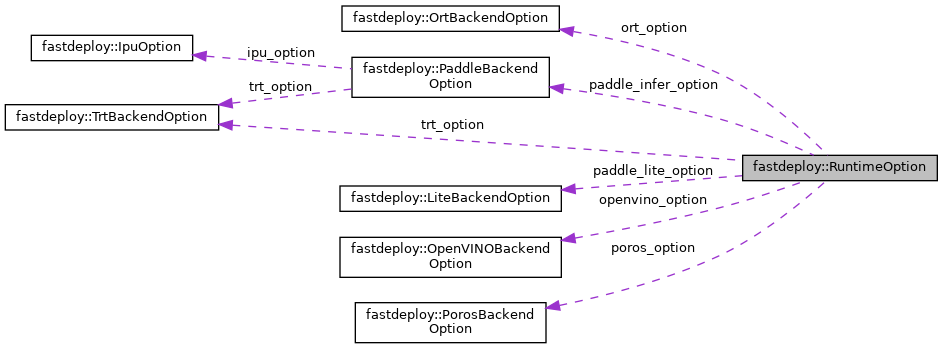

Collaboration diagram for fastdeploy::RuntimeOption:

Public Member Functions | |

| void | SetModelPath (const std::string &model_path, const std::string ¶ms_path="", const ModelFormat &format=ModelFormat::PADDLE) |

| Set path of model file and parameter file. More... | |

| void | SetModelBuffer (const std::string &model_buffer, const std::string ¶ms_buffer="", const ModelFormat &format=ModelFormat::PADDLE) |

| Specify the memory buffer of model and parameter. Used when model and params are loaded directly from memory. More... | |

| void | SetEncryptionKey (const std::string &encryption_key) |

| When loading encrypted model, encryption_key is required to decrypte model. More... | |

| void | UseCpu () |

| Use cpu to inference, the runtime will inference on CPU by default. | |

| void | UseGpu (int gpu_id=0) |

| Use Nvidia GPU to inference. | |

| void | UseRKNPU2 (fastdeploy::rknpu2::CpuName rknpu2_name=fastdeploy::rknpu2::CpuName::RK356X, fastdeploy::rknpu2::CoreMask rknpu2_core=fastdeploy::rknpu2::CoreMask::RKNN_NPU_CORE_AUTO) |

| Use RKNPU2 e.g RK3588/RK356X to inference. | |

| void | UseTimVX () |

| Use TimVX e.g RV1126/A311D to inference. | |

| void | UseAscend () |

| Use Huawei Ascend to inference. | |

| void | UseDirectML () |

| Use onnxruntime DirectML to inference. | |

| void | UseSophgo () |

| Use Sophgo to inference. | |

| void | UseKunlunXin (int kunlunxin_id=0, int l3_workspace_size=0xfffc00, bool locked=false, bool autotune=true, const std::string &autotune_file="", const std::string &precision="int16", bool adaptive_seqlen=false, bool enable_multi_stream=false) |

| Turn on KunlunXin XPU. More... | |

| void | UsePaddleInferBackend () |

| Set Paddle Inference as inference backend, support CPU/GPU. | |

| void | UseOrtBackend () |

| Set ONNX Runtime as inference backend, support CPU/GPU. | |

| void | UseSophgoBackend () |

| Set SOPHGO Runtime as inference backend, support SOPHGO. | |

| void | UseTrtBackend () |

| Set TensorRT as inference backend, only support GPU. | |

| void | UsePorosBackend () |

| Set Poros backend as inference backend, support CPU/GPU. | |

| void | UseOpenVINOBackend () |

| Set OpenVINO as inference backend, only support CPU. | |

| void | UsePaddleLiteBackend () |

| Set Paddle Lite as inference backend, only support arm cpu. | |

| void | UseIpu (int device_num=1, int micro_batch_size=1, bool enable_pipelining=false, int batches_per_step=1) |

Public Attributes | |

| OrtBackendOption | ort_option |

| Option to configure ONNX Runtime backend. | |

| TrtBackendOption | trt_option |

| Option to configure TensorRT backend. | |

| PaddleBackendOption | paddle_infer_option |

| Option to configure Paddle Inference backend. | |

| PorosBackendOption | poros_option |

| Option to configure Poros backend. | |

| OpenVINOBackendOption | openvino_option |

| Option to configure OpenVINO backend. | |

| LiteBackendOption | paddle_lite_option |

| Option to configure Paddle Lite backend. | |

| RKNPU2BackendOption | rknpu2_option |

| Option to configure RKNPU2 backend. | |

Detailed Description

Option object used when create a new Runtime object.

Member Function Documentation

◆ SetEncryptionKey()

| void fastdeploy::RuntimeOption::SetEncryptionKey | ( | const std::string & | encryption_key | ) |

When loading encrypted model, encryption_key is required to decrypte model.

- Parameters

-

[in] encryption_key The key for decrypting model

◆ SetModelBuffer()

| void fastdeploy::RuntimeOption::SetModelBuffer | ( | const std::string & | model_buffer, |

| const std::string & | params_buffer = "", |

||

| const ModelFormat & | format = ModelFormat::PADDLE |

||

| ) |

Specify the memory buffer of model and parameter. Used when model and params are loaded directly from memory.

- Parameters

-

[in] model_buffer The string of model memory buffer [in] params_buffer The string of parameters memory buffer [in] format Format of the loaded model

◆ SetModelPath()

| void fastdeploy::RuntimeOption::SetModelPath | ( | const std::string & | model_path, |

| const std::string & | params_path = "", |

||

| const ModelFormat & | format = ModelFormat::PADDLE |

||

| ) |

Set path of model file and parameter file.

- Parameters

-

[in] model_path Path of model file, e.g ResNet50/model.pdmodel for Paddle format model / ResNet50/model.onnx for ONNX format model [in] params_path Path of parameter file, this only used when the model format is Paddle, e.g Resnet50/model.pdiparams [in] format Format of the loaded model

◆ UseIpu()

| void fastdeploy::RuntimeOption::UseIpu | ( | int | device_num = 1, |

| int | micro_batch_size = 1, |

||

| bool | enable_pipelining = false, |

||

| int | batches_per_step = 1 |

||

| ) |

Graphcore IPU to inference.

- Parameters

-

[in] device_num the number of IPUs. [in] micro_batch_size the batch size in the graph, only work when graph has no batch shape info. [in] enable_pipelining enable pipelining. [in] batches_per_step the number of batches per run in pipelining.

◆ UseKunlunXin()

| void fastdeploy::RuntimeOption::UseKunlunXin | ( | int | kunlunxin_id = 0, |

| int | l3_workspace_size = 0xfffc00, |

||

| bool | locked = false, |

||

| bool | autotune = true, |

||

| const std::string & | autotune_file = "", |

||

| const std::string & | precision = "int16", |

||

| bool | adaptive_seqlen = false, |

||

| bool | enable_multi_stream = false |

||

| ) |

Turn on KunlunXin XPU.

- Parameters

-

kunlunxin_id the KunlunXin XPU card to use (default is 0). l3_workspace_size The size of the video memory allocated by the l3 cache, the maximum is 16M. locked Whether the allocated L3 cache can be locked. If false, it means that the L3 cache is not locked, and the allocated L3 cache can be shared by multiple models, and multiple models sharing the L3 cache will be executed sequentially on the card. autotune Whether to autotune the conv operator in the model. If true, when the conv operator of a certain dimension is executed for the first time, it will automatically search for a better algorithm to improve the performance of subsequent conv operators of the same dimension. autotune_file Specify the path of the autotune file. If autotune_file is specified, the algorithm specified in the file will be used and autotune will not be performed again. precision Calculation accuracy of multi_encoder adaptive_seqlen Is the input of multi_encoder variable length enable_multi_stream Whether to enable the multi stream of KunlunXin XPU.

The documentation for this struct was generated from the following files:

- /fastdeploy/my_work/FastDeploy/fastdeploy/runtime/runtime_option.h

- /fastdeploy/my_work/FastDeploy/fastdeploy/runtime/runtime_option.cc

1.8.13

1.8.13