ProcessorManager for preprocessing.

#include <manager.h>

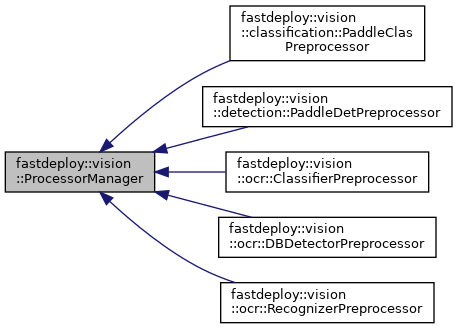

Inheritance diagram for fastdeploy::vision::ProcessorManager:

Public Member Functions

| Return type | Function | Description |
|---|---|---|
| void | UseCuda (bool enable_cv_cuda=false, int gpu_id=-1) | Use CUDA to boost the performance of processors. |
| bool | Run (std::vector< FDMat > *images, std::vector< FDTensor > *outputs) | Process the input images and prepare input tensors for runtime. |
| virtual bool | Apply (FDMatBatch *image_batch, std::vector< FDTensor > *outputs)=0 | Apply() is the body of the Run() function; it must be implemented by a derived class. |

Detailed Description

ProcessorManager for preprocessing.

Member Function Documentation

◆ Apply()

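virtual bool fastdeploy::vision::ProcessorManager::Apply (FDMatBatch * image_batch, std::vector< FDTensor > * outputs)

pure virtual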

Apply() is the body of the Run() function; it must be implemented by a derived class.

- Parameters
  - [in] image_batch: The input image batch
  - [out] outputs: The output tensors, which will be fed to the runtime

- Returns
  - true if preprocessing succeeded, otherwise false

Implemented in fastdeploy::vision::ocr::RecognizerPreprocessor, fastdeploy::vision::ocr::ClassifierPreprocessor, fastdeploy::vision::detection::PaddleDetPreprocessor, fastdeploy::vision::classification::PaddleClasPreprocessor, and fastdeploy::vision::ocr::DBDetectorPreprocessor.
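The following is a minimal, self-contained sketch of what a derived class's Apply() can look like. It does not use FastDeploy itself: FDMat, FDMatBatch and FDTensor are hypothetical stand-ins reduced to a few fields, ScalePreprocessor is a hypothetical preprocessor, and the base-class Run() assumes that Run() wraps the images in a batch and forwards to Apply(), which is how "Apply() is the body of Run()" is read here.

```cpp
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical stand-ins for FastDeploy types, reduced to what this sketch needs.
struct FDMat {
  int height = 0;
  int width = 0;
  int channels = 0;
  std::vector<std::uint8_t> data;  // interleaved HWC pixels
};

struct FDMatBatch {
  std::vector<FDMat>* mats = nullptr;
};

struct FDTensor {
  std::vector<std::int64_t> shape;
  std::vector<float> data;
};

// Simplified base class mirroring the documented interface.
class ProcessorManager {
 public:
  virtual ~ProcessorManager() = default;

  // Assumption: Run() wraps the images in a batch and delegates to Apply().
  bool Run(std::vector<FDMat>* images, std::vector<FDTensor>* outputs) {
    FDMatBatch batch;
    batch.mats = images;
    return Apply(&batch, outputs);
  }

  virtual bool Apply(FDMatBatch* image_batch,
                     std::vector<FDTensor>* outputs) = 0;
};

// Hypothetical derived preprocessor: scales pixels to [0, 1] and packs the
// whole batch into a single NHWC float tensor.
class ScalePreprocessor : public ProcessorManager {
 public:
  bool Apply(FDMatBatch* image_batch,
             std::vector<FDTensor>* outputs) override {
    if (image_batch == nullptr || image_batch->mats == nullptr ||
        image_batch->mats->empty() || outputs == nullptr) {
      return false;  // nothing to preprocess
    }
    const FDMat& first = image_batch->mats->front();
    FDTensor tensor;
    tensor.shape = {static_cast<std::int64_t>(image_batch->mats->size()),
                    first.height, first.width, first.channels};
    for (const FDMat& mat : *image_batch->mats) {
      const std::size_t expected =
          static_cast<std::size_t>(mat.height) * mat.width * mat.channels;
      // Every image must match the first image's shape and carry full data.
      if (mat.height != first.height || mat.width != first.width ||
          mat.channels != first.channels || mat.data.size() != expected) {
        return false;
      }
      for (std::uint8_t px : mat.data) {
        tensor.data.push_back(px / 255.0f);
      }
    }
    outputs->clear();
    outputs->push_back(std::move(tensor));
    return true;
  }
};
```

Returning false instead of throwing matches the documented contract: Apply() reports failure through its bool result, and Run() passes that result on to the caller.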

◆ Run()

bool fastdeploy::vision::ProcessorManager::Run (std::vector< FDMat > * images, std::vector< FDTensor > * outputs)

Process the input images and prepare input tensors for runtime.

- Parameters
  - [in] images: The list of input images; each element holds image data as returned by cv::imread()
  - [out] outputs: The output tensors, which will be fed to the runtime

- Returns
  - true if preprocessing succeeded, otherwise false
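From the caller's side, the key point is to check Run()'s return value before handing the outputs to the runtime. The sketch below is self-contained and hypothetical: the FDMat, FDMatBatch and FDTensor stand-ins, ShapeOnlyPreprocessor, and the PrepareInputs() helper are illustrative names, not FastDeploy APIs, and Run() is assumed to delegate to Apply().

```cpp
#include <cstdint>
#include <iostream>
#include <vector>

// Hypothetical stand-ins for FastDeploy types.
struct FDMat {
  int height = 0;
  int width = 0;
  int channels = 0;
};
struct FDMatBatch {
  std::vector<FDMat>* mats = nullptr;
};
struct FDTensor {
  std::vector<std::int64_t> shape;
};

class ProcessorManager {
 public:
  virtual ~ProcessorManager() = default;
  // Assumption: Run() wraps the caller's images in a batch and calls Apply().
  bool Run(std::vector<FDMat>* images, std::vector<FDTensor>* outputs) {
    FDMatBatch batch{images};
    return Apply(&batch, outputs);
  }
  virtual bool Apply(FDMatBatch* image_batch,
                     std::vector<FDTensor>* outputs) = 0;
};

// Hypothetical preprocessor: emits one CHW shape-only tensor per image and
// rejects images without pixels.
class ShapeOnlyPreprocessor : public ProcessorManager {
 public:
  bool Apply(FDMatBatch* image_batch,
             std::vector<FDTensor>* outputs) override {
    outputs->clear();
    for (const FDMat& mat : *image_batch->mats) {
      if (mat.height <= 0 || mat.width <= 0) return false;
      outputs->push_back(FDTensor{{mat.channels, mat.height, mat.width}});
    }
    return true;
  }
};

// Hypothetical caller-side helper: only report success when Run() succeeded.
bool PrepareInputs(ProcessorManager& preprocessor, std::vector<FDMat>& images,
                   std::vector<FDTensor>* inputs) {
  if (!preprocessor.Run(&images, inputs)) {
    std::cerr << "Preprocessing failed; skipping inference.\n";
    return false;
  }
  return true;
}
```

In this sketch a failed Run() can leave `inputs` partially filled, so the caller treats its contents as unusable whenever false is returned.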

◆ UseCuda()

void fastdeploy::vision::ProcessorManager::UseCuda (bool enable_cv_cuda = false, int gpu_id = -1)

Use CUDA to boost the performance of processors.

- Parameters
  - [in] enable_cv_cuda: true to use CV-CUDA, false to use CUDA only
  - [in] gpu_id: GPU device ID

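As an illustration of how the two parameters combine, here is a hypothetical sketch that only records the selection. ProcLib and ProcessorManagerSketch are illustrative names, not FastDeploy types; the real class configures its processors rather than storing flags, and the CPU default before UseCuda() is called, as well as the meaning of gpu_id = -1, are assumptions this sketch does not resolve.

```cpp
// Hypothetical enum naming the backends described for UseCuda().
enum class ProcLib { kCpu, kCuda, kCvCuda };

// Hypothetical stand-in that records the settings passed to UseCuda().
class ProcessorManagerSketch {
 public:
  // Mirrors the documented defaults: plain CUDA, gpu_id = -1.
  void UseCuda(bool enable_cv_cuda = false, int gpu_id = -1) {
    lib_ = enable_cv_cuda ? ProcLib::kCvCuda : ProcLib::kCuda;
    device_id_ = gpu_id;
  }
  ProcLib lib() const { return lib_; }
  int device_id() const { return device_id_; }

 private:
  ProcLib lib_ = ProcLib::kCpu;  // assumed default before UseCuda() is called
  int device_id_ = -1;
};
```

In this sketch, `UseCuda()` selects plain CUDA, while `UseCuda(true, 0)` selects CV-CUDA on device 0; the call would presumably be made once, before the first Run().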

The documentation for this class was generated from the following files:

- /fastdeploy/my_work/FastDeploy/fastdeploy/vision/common/processors/manager.h

- /fastdeploy/my_work/FastDeploy/fastdeploy/vision/common/processors/manager.cc

1.8.13