Project Plan

5) A pygame UI displays the key-press effect and plays the corresponding piano note.
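The notes come from effectDict in ModuleSound, which is not shown. If no sample files are at hand, a minimal sketch like the one below can synthesize one tone per key instead. It assumes equal temperament, key 0 mapped to middle C (MIDI note 60) and a 44100 Hz sample rate. key_frequency and key_tone are hypothetical helpers, not part of the project.

```python
import numpy as np

SAMPLE_RATE = 44100  # samples per second (assumed)


def key_frequency(key_index, base_midi=60):
    """Equal-temperament frequency in Hz of the key `key_index` semitones above MIDI note `base_midi`."""
    midi = base_midi + key_index
    return 440.0 * 2 ** ((midi - 69) / 12)  # MIDI 69 is A4 = 440 Hz


def key_tone(key_index, duration=0.5, sample_rate=SAMPLE_RATE):
    """Return a mono 16-bit sine tone with a linear fade-out as an int16 numpy array."""
    t = np.arange(int(duration * sample_rate)) / sample_rate
    wave = np.sin(2 * np.pi * key_frequency(key_index) * t)
    envelope = np.linspace(1.0, 0.0, t.size)  # simple decay so the note does not cut off abruptly
    return (wave * envelope * 0.5 * 32767).astype(np.int16)


if __name__ == "__main__":
    for i in range(12):  # keyNums = 12 in the main UI code
        print(i, round(key_frequency(i), 2))
```

To actually hear a tone, the mixer has to match the array format, for example by calling pygame.mixer.pre_init(frequency=44100, size=-16, channels=1) before pygame.init() and then pygame.sndarray.make_sound(key_tone(0)).play().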

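Project Features

3) The producer-consumer pattern makes full use of Python multiprocessing, so frame display, model inference, and result feedback all run efficiently in real time and give a smooth experience on edge devices.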

Model Inference

Hardware Preparation: Camera Placement

An ordinary USB webcam is sufficient, preferably one with a higher resolution.

Algorithm Selection

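hand_pose_localization model

https://www.paddlepaddle.org.cn/hubdetail?name=hand_pose_localization&en_category=KeyPointDetection

A PaddlePaddle implementation of a 21-keypoint hand detection model

Hand keypoint model based on CenterNet

Modifying CenterNet

Building on the models above, I used the PaddlePaddle framework to create a modified CenterNet keypoint model with an added branch that locates landmarks from heatmaps. The approach resembles the winning solution of the DeepFashion2 challenge, which, as shown in the figure below, adds keypoint detection on top of CenterNet. Because our task is comparatively simple and no bounding box is needed, the object-size regression was removed. The full code will be released as an AI Studio project.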
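The decoding code of the modified model has not been released, so the following is only a sketch of the usual CenterNet-style way to turn keypoint heatmaps into coordinates: take the peak of each of the K channels, optionally add a sub-pixel offset, and scale by the output stride. It assumes heatmaps of shape (K, H, W) (K = 21 here), an optional shared offset map of shape (2, H, W), and a stride of 4 (CenterNet's usual output stride). It finds one point per channel, whereas the project tracks two hands; decode_heatmaps is a hypothetical name.

```python
import numpy as np


def decode_heatmaps(heatmaps, stride=4, offsets=None):
    """Decode (K, H, W) keypoint heatmaps into a (K, 3) array of (x, y, score) in input pixels."""
    num_kpts, _, width = heatmaps.shape
    flat = heatmaps.reshape(num_kpts, -1)
    peak = flat.argmax(axis=1)                 # flat index of the strongest response per channel
    scores = flat[np.arange(num_kpts), peak]   # heatmap value at that peak
    rows, cols = np.divmod(peak, width)        # flat index -> (row, column) on the heatmap grid
    xs = cols.astype(np.float32)
    ys = rows.astype(np.float32)
    if offsets is not None:                    # optional sub-pixel correction, shape (2, H, W)
        xs += offsets[0, rows, cols]
        ys += offsets[1, rows, cols]
    # Scale from heatmap resolution back to input-image resolution.
    return np.stack([xs * stride, ys * stride, scores], axis=1)


if __name__ == "__main__":
    demo = np.zeros((21, 64, 64), dtype=np.float32)
    demo[8, 30, 12] = 1.0                      # pretend keypoint 8 peaks at row 30, column 12
    print(decode_heatmaps(demo)[8])
```

A keypoint that is not visible still returns its argmax location, so in practice rows whose score falls below a threshold would be discarded.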

Training Data

The training set is Eric.Lee's handpose_datasets_v2, which extends handpose_datasets_v1 with a left/right-hand attribute ("handType": "Left" or "Right"). It contains more than 380,000 samples in total.

Program Flow

Input module (producer)

Hand keypoint prediction module (consumer)
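FrameProducer (ModuleInput) and FrameConsumer (ModuleConsumer) are not shown. The minimal sketch below illustrates the same pattern with the standard multiprocessing module: a producer process pushes frames into a Queue bounded at two items, as dataQueue is in the main UI code, and the main process consumes them. Synthetic frames stand in for the camera, fake_inference stands in for the CenterNet model, and a None sentinel ends the stream; all function names here are hypothetical.

```python
import multiprocessing as mp

import numpy as np


def produce_frames(frame_queue, num_frames):
    """Producer process: generate frames and push them into a bounded queue."""
    for i in range(num_frames):
        frame = np.full((48, 64, 3), i % 256, dtype=np.uint8)  # stand-in for a camera frame
        frame_queue.put(frame)  # blocks while the queue is full, so memory stays bounded
    frame_queue.put(None)       # sentinel: no more frames


def fake_inference(frame):
    """Stand-in for the keypoint model: return the mean pixel value."""
    return float(frame.mean())


def consume_frames(frame_queue):
    """Consumer (main process, like loopRun): handle frames until the sentinel arrives."""
    results = []
    while True:
        frame = frame_queue.get()  # blocks instead of polling qsize()
        if frame is None:
            break
        results.append(fake_inference(frame[:, ::-1, :]))  # horizontal flip, as in loopRun
    return results


def run_pipeline(num_frames=5):
    frame_queue = mp.Queue(maxsize=2)  # same bound as dataQueue
    producer = mp.Process(target=produce_frames, args=(frame_queue, num_frames), daemon=True)
    producer.start()
    results = consume_frames(frame_queue)
    producer.join()
    return results


if __name__ == "__main__":
    print(run_pipeline())
```

Blocking on get() avoids a sleep-and-poll loop around qsize(), which also matters because multiprocessing.Queue.qsize() raises NotImplementedError on macOS. Keep the `if __name__ == "__main__"` guard: on platforms that spawn child processes, the main module is re-imported in the child.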

Main UI module

import pygame
from pygame.locals import *
from sys import exit
import sys

pathDict = {}
pathDict['hand'] = '../HandKeypoints/'
for path in pathDict.values():
    sys.path.append(path)

import cv2
import time
from collections import deque
from PIL import Image
import traceback
from multiprocessing import Queue, Process
from ModuleSound import effectDict
# from ModuleHand import handKeypoints
import CVTools as CVT
import GameTools as GT
from ModuleConsumer import FrameConsumer
from predict7 import CenterNet
from ModuleInput import FrameProducer
import numpy as np

pygame.init()

def frameShow(frame, screen):
    # timeStamp = cap.get(cv2.CAP_PROP_POS_MSEC)
    # frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    # Reverse the channel order (BGR <-> RGB) by slicing instead of cvtColor.
    frame = np.array(frame)[:, :, ::-1]
    # pygame.surfarray expects (width, height, 3), so swap rows and columns.
    frame = cv2.transpose(frame)
    frame = pygame.surfarray.make_surface(frame)
    screen.blit(frame, (0, 0))
    pygame.display.update()
    # return timeStamp

def resetKeyboardPos(ftR, thresholdY):
    # Calibration: move the keyboard line to the mean y of the detected fingertips.
    print('key SPACE', ftR)
    if len(ftR) > 0:
        ftR = np.array(ftR)
        avrR = np.average(ftR[:, 1])
        thresholdY = int(avrR)
        print('reset thresholdY', thresholdY)
    return thresholdY

def keyboardResponse(producer, ftR, thresholdY):
    # Window events: quit on close or ESC, recalibrate on mouse click or SPACE.
    for event in pygame.event.get():
        if event.type == pygame.QUIT:
            # If FrameProducer runs in a separate process, this flag only reaches the
            # child when runFlag is backed by shared state (e.g. multiprocessing.Value).
            producer.runFlag = False
            exit()
        elif event.type == pygame.MOUSEBUTTONUP:
            thresholdY = resetKeyboardPos(ftR, thresholdY)
        elif event.type == pygame.KEYDOWN:
            if event.key == pygame.K_ESCAPE:
                producer.runFlag = False
                exit()
            elif event.key == pygame.K_SPACE:
                thresholdY = resetKeyboardPos(ftR, thresholdY)
    return thresholdY


def loopRun(dataQueue, wSize, hSize, producer, consumer, thresholdY, movieDict, skipFrame):
    # tip position of hand down
    ftDown1 = {}
    ftDown2 = {}
    # tip position for now
    ft1 = {}
    ft2 = {}
    # tip position of hand up
    ftUp1 = {}
    ftUp2 = {}
    # per-hand state carried between frames by GT.pressDetect
    stageR = -1
    stageL = -1
    resXR = -1
    resXL = -1
    idsR = -1
    idsL = -1
    biasDict1 = {}
    biasDict2 = {}
    screen = pygame.display.set_mode((wSize, hSize))
    # cap = cv2.VideoCapture(path)
    num = -1
    keyNums = 12  # number of piano keys across the window width
    biasy = 20
    result = {}
    while True:
        FPS = producer.fps / skipFrame
        videoFlag = FPS > 0
        # Wait briefly while no frame is available
        # (multiprocessing.Queue.qsize() raises NotImplementedError on macOS).
        if dataQueue.qsize() == 0:
            time.sleep(0.1)
            continue
        image = dataQueue.get()
        # flip left-right so the display behaves like a mirror
        image = image[:, ::-1, :]
        result = consumer.process(image, thresholdY)
        resImage = result['image']
        ftR = result['fringerTip1']
        ftL = result['fringerTip2']
        thresholdY = keyboardResponse(producer, ftR, thresholdY)
        if videoFlag:
            num += 1
            if num == 0:
                T0 = time.time()
                print('T0', T0, num * (1. / FPS))
        try:
            resImage = GT.uiProcess(resImage, ftR, ftL, biasy)
        except Exception:
            traceback.print_exc()
        try:
            fringerR, keyIndexR, stageR = GT.pressDetect(ftR, stageR, thresholdY, biasy, wSize, keyNums)
            fringerL, keyIndexL, stageL = GT.pressDetect(ftL, stageL, thresholdY, biasy, wSize, keyNums)
        except Exception:
            traceback.print_exc()
        else:
            # Play sounds and animations only when press detection succeeded; otherwise
            # keyIndexR/keyIndexL would be undefined (first frame) or stale.
            GT.soundPlay(effectDict, keyIndexR)
            GT.soundPlay(effectDict, keyIndexL)
            resImage = GT.moviePlay(movieDict, keyIndexR, resImage, thresholdY)
            resImage = GT.moviePlay(movieDict, keyIndexL, resImage, thresholdY)
        frameShow(resImage, screen)
        # clear result
        result = {}

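if __name__ == '__main__':
    link = 0            # video source passed to FrameProducer (presumably camera index 0)
    wSize = 640         # window width in pixels
    hSize = 480         # window height in pixels
    skipFrame = 2
    dataQueue = Queue(maxsize=2)  # at most two frames are buffered
    resultDeque = Queue()
    thresholdY = 250    # initial y of the keyboard line, recalibrated at runtime
    producer = FrameProducer(dataQueue, link)
    frontPIL = Image.open('pianoPic/pianobg.png')
    # The author's local path; point it at your own HandKeypoints folder.
    handkeypoint = CenterNet(folderPath='/home/sig/sig_dir/program/HandKeypoints/')
    consumer = FrameConsumer(dataQueue, resultDeque, handkeypoint, frontPIL)
    producer.start()
    moviePicPath = 'pianoPic/'
    movieDict = GT.loadMovieDict(moviePicPath)
    loopRun(dataQueue, wSize, hSize, producer, consumer, thresholdY, movieDict, skipFrame)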

Deployment

Download the model code

Running on x86 / x64 systems

Enter the HandPiano folder and run python main.py.

Running on ARM devices such as the Jetson NX

Download and install the Paddle Inference library.

Extract the archive in data into the directory.

Enter the HandPiano folder and run python main.py to start the program.

Running

Demo

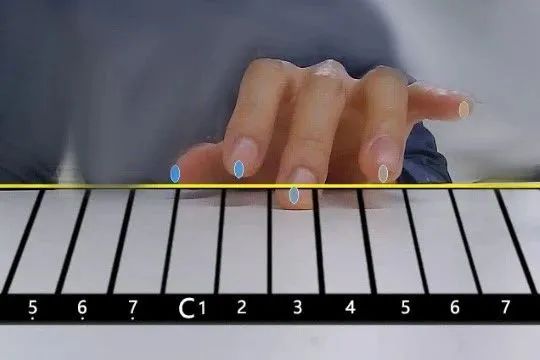

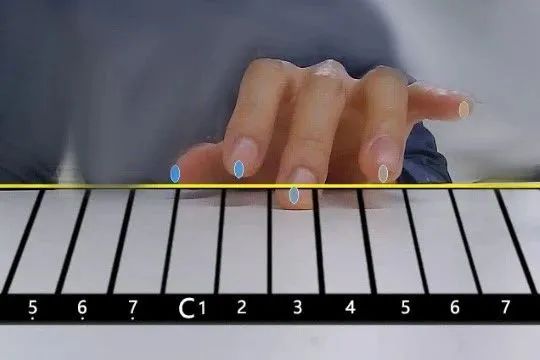

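Place the five fingers of one hand on the desk. Once a circle appears on each of the five fingertips, click the left mouse button (pressing SPACE does the same) to run tap-position calibration.

Calibration sets the keyboard line to the average height of the detected fingertips and repositions the piano-key UI, so the yellow line lies along the line formed by the fingertips.

When a fingertip point crosses the yellow line into the key area, the sound for that key is triggered.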
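The rule that decides when a key fires lives in GT.pressDetect (GameTools), which is not shown. As a rough illustration only, the sketch below implements one plausible rule under stated assumptions: the keys split the window width evenly (mirroring wSize and keyNums), image y grows downward so a press means a fingertip moving below the line, and bias_y acts as a hysteresis margin so a single press fires only once. key_index_from_x and PressDetector are hypothetical names, not the author's implementation.

```python
def key_index_from_x(x, width=640, num_keys=12):
    """Map a fingertip x coordinate (pixels) to one of num_keys equal-width keys."""
    idx = int(x * num_keys // width)
    return min(max(idx, 0), num_keys - 1)  # clamp points at or beyond the window edges


class PressDetector:
    """Per-finger press detection with hysteresis around the calibrated line (hypothetical)."""

    def __init__(self, threshold_y, bias_y=20, width=640, num_keys=12, num_fingers=5):
        self.threshold_y = threshold_y  # calibrated yellow-line height, like thresholdY
        self.bias_y = bias_y            # assumed: extra depth needed before a press counts
        self.width = width
        self.num_keys = num_keys
        self.down = [False] * num_fingers  # whether each fingertip is currently pressing

    def update(self, tips):
        """tips: (x, y) points in a fixed finger order; returns keys newly pressed this frame."""
        pressed_keys = []
        for i, (x, y) in enumerate(tips[:len(self.down)]):
            if not self.down[i] and y > self.threshold_y + self.bias_y:
                self.down[i] = True
                pressed_keys.append(key_index_from_x(x, self.width, self.num_keys))
            elif self.down[i] and y < self.threshold_y:
                self.down[i] = False  # fingertip back above the line: re-arm this finger
        return pressed_keys


if __name__ == "__main__":
    detector = PressDetector(threshold_y=250)
    for tips in ([(100, 240)], [(100, 275)], [(100, 280)], [(100, 240)]):
        print(detector.update(tips))
```

With the default bias_y of 20, a fingertip must pass y = 270 to fire and rise back above y = 250 before it can fire again, so jitter around the line does not retrigger the key.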
