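This November's WAVE SUMMIT+2022 will also showcase more than 30 open-source demo projects, covering industry applications such as smart cities, sports, and interactive entertainment. This marketplace article series offers an early look.

Today, PaddlePaddle Developer Technical Expert Zhang Yiqiao introduces the "Nail Art Preview Machine" project.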

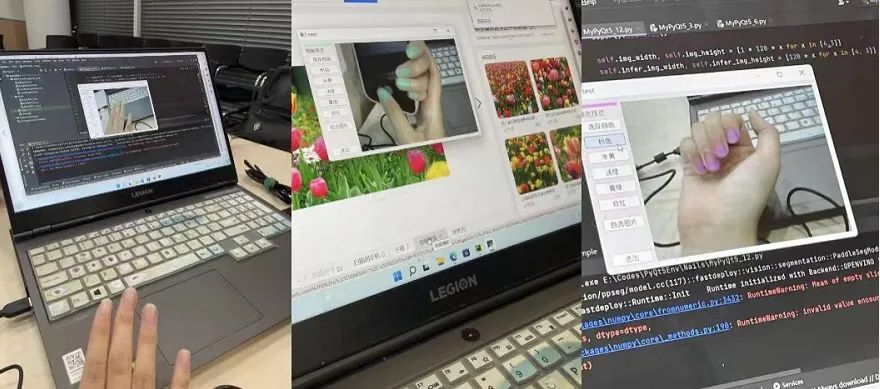

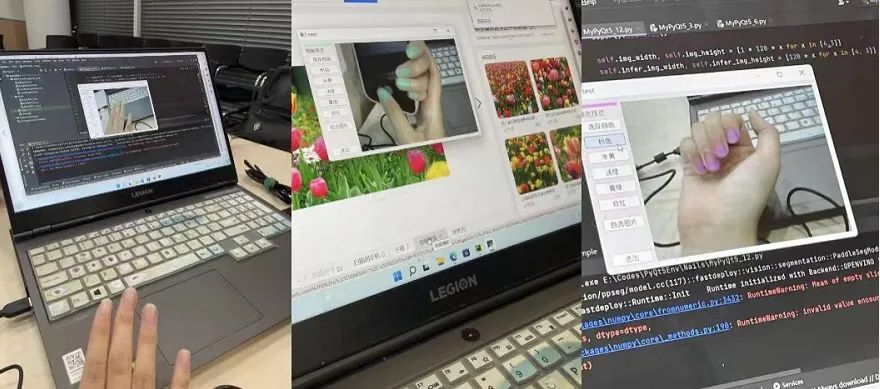

Project demo

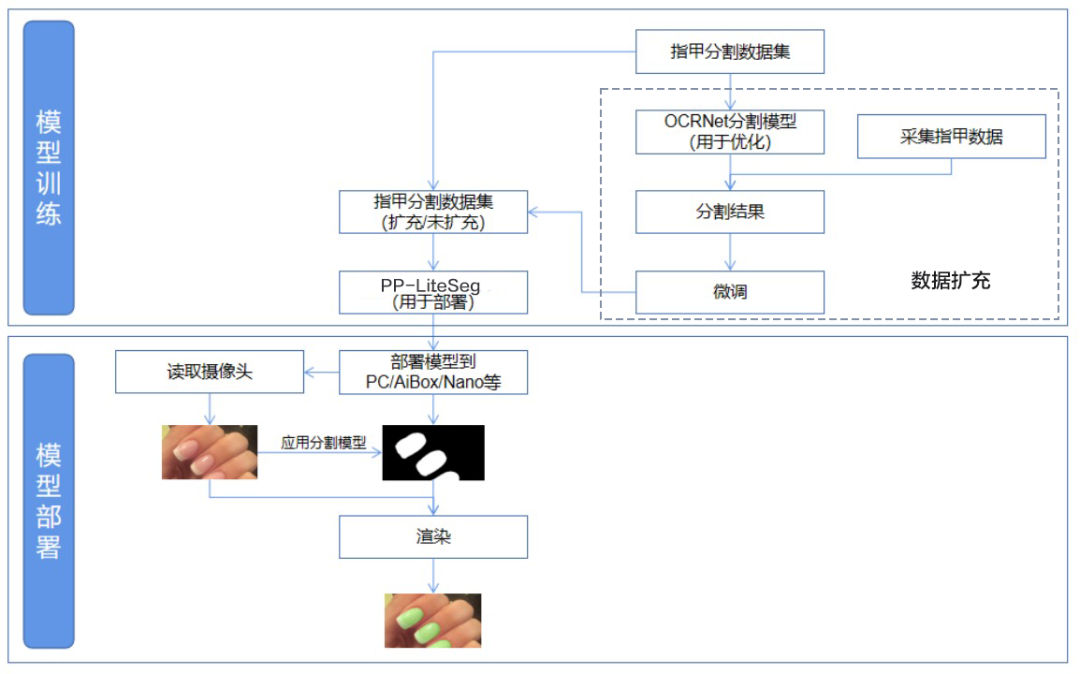

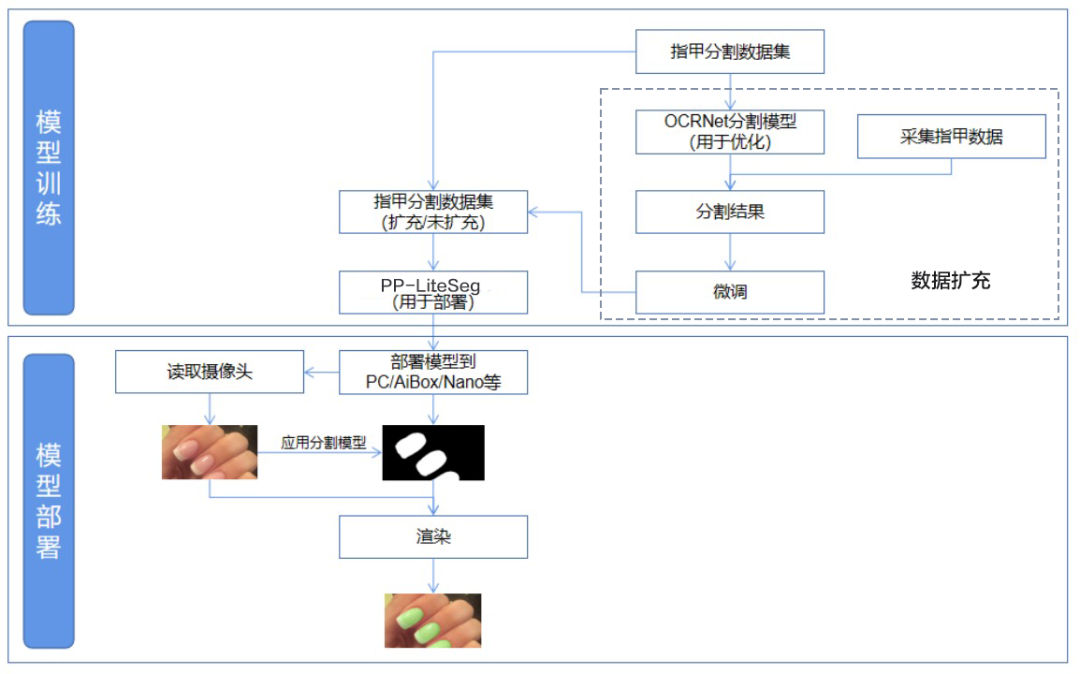

Project flowchart

Technical details

Development environment setup

pip install fastdeploy-python -f https://www.paddlepaddle.org.cn/whl/fastdeploy.html

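For more supported models and installation options (such as the GPU version), refer to the FastDeploy documentation.

Training the segmentation model

First, train a nail segmentation model. The download link for the base dataset is:

After obtaining the data, list the samples in txt files (train.txt and val.txt are the paths that the training configuration expects).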
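As a rough illustration of this step, the sketch below writes train.txt and val.txt with one `image_path label_path` pair per line, separated by a space and relative to the dataset root, which is the list layout that PaddleSeg's generic `Dataset` type reads. The `images/` and `labels/` folder names, the matching-file-stem rule, and the 90/10 split are assumptions made for illustration, not details from the original project.

```python
import random
from pathlib import Path


def write_file_lists(dataset_root, val_ratio=0.1, seed=0):
    """Write train.txt / val.txt under dataset_root; return (n_train, n_val)."""
    root = Path(dataset_root)
    pairs = []
    # Assumed layout: images/<name>.jpg paired with labels/<name>.png
    for image in sorted((root / "images").glob("*.jpg")):
        label = root / "labels" / (image.stem + ".png")
        if label.exists():  # skip images that have no annotation
            pairs.append(f"{image.relative_to(root).as_posix()} "
                         f"{label.relative_to(root).as_posix()}")
    random.Random(seed).shuffle(pairs)  # fixed seed keeps the split reproducible
    n_val = max(1, int(len(pairs) * val_ratio)) if pairs else 0
    (root / "val.txt").write_text("\n".join(pairs[:n_val]) + "\n")
    (root / "train.txt").write_text("\n".join(pairs[n_val:]) + "\n")
    return len(pairs) - n_val, n_val
```

Calling `write_file_lists("/home/aistudio")` would then produce the two list files at the paths used by `train_path` and `val_path`, provided the data really follows the assumed folder layout.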

# Training configuration (saved as ~/myconfig.yml, the path passed to train.py)
batch_size: 8   # batch size
iters: 5000     # number of training iterations

train_dataset:
  # Training set paths; augmentation consists of random crop, resize,
  # horizontal flip, brightness/contrast/saturation distortion, and normalization
  type: Dataset
  dataset_root: /home/aistudio
  train_path: /home/aistudio/train.txt
  num_classes: 2
  mode: train
  transforms:
    - type: RandomPaddingCrop
      crop_size: [480, 360]
    - type: Resize
      target_size: [480, 360]
    - type: RandomHorizontalFlip
    - type: RandomDistort
      brightness_range: 0.5
      contrast_range: 0.5
      saturation_range: 0.5
    - type: Normalize

val_dataset:
  # Validation set
  type: Dataset
  dataset_root: /home/aistudio
  val_path: /home/aistudio/val.txt
  num_classes: 2
  mode: val
  transforms:
    - type: Normalize

optimizer:
  # Optimizer settings
  type: sgd
  momentum: 0.9
  weight_decay: 4.0e-5

lr_scheduler:
  # Learning-rate schedule
  type: PolynomialDecay
  learning_rate: 0.01
  end_lr: 0
  power: 0.9

loss:
  # Cross-entropy loss, one coefficient per model output
  types:
    - type: CrossEntropyLoss
  coef: [1, 1, 1]

model:
  # Use PPLiteSeg as the model
  type: PPLiteSeg
  backbone:
    type: STDC2
    pretrained: https://bj.bcebos.com/paddleseg/dygraph/PP_STDCNet2.tar.gz
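To make the `lr_scheduler` settings more concrete, here is a minimal sketch of the polynomial decay formula with the values from the configuration (`learning_rate: 0.01`, `end_lr: 0`, `power: 0.9`). It assumes that the decay runs over the full `iters: 5000`; the function name and the `decay_steps` parameter are illustrative and not part of PaddleSeg.

```python
def polynomial_decay(step, base_lr=0.01, end_lr=0.0, power=0.9, decay_steps=5000):
    """Learning rate after `step` iterations of polynomial decay."""
    step = min(step, decay_steps)  # hold at end_lr once decay_steps is reached
    return (base_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr


# Print the learning rate at the start, the midpoint, and the end of training
for step in (0, 2500, 5000):
    print(step, polynomial_decay(step))
```

With `power` below 1 the rate falls slightly more slowly than a straight line in the early iterations and drops more steeply near the end, reaching `end_lr` exactly at the last iteration.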

%cd ~/PaddleSeg
# Use %env here: an `export` run through `!` only affects a throwaway subshell
%env CUDA_VISIBLE_DEVICES=0
!python train.py \
    --config ~/myconfig.yml \
    --save_interval 2500 \
    --do_eval \
    --use_vdl \
    --save_dir output

The trained model is saved in ~/PaddleSeg/output/best_model/. Finally, export the trained model as an inference model for later deployment.

!python export.py \
    --config ~/myconfig.yml \
    --model_path output/best_model/model.pdparams \
    --save_dir ~/infer_model

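Data expansion

1. Train an OCRNet model
2. Collect images that contain fingernails
3. Use OCRNet to pre-annotate the images
4. Manually fine-tune the predicted annotations

Once the touch-up is done, you have a larger training set and can train a better segmentation network.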

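Nail rendering

For a given pixel:

Color = Base_Color + Influence

Target_Color = Target_Base_Color + Influence

Here Influence is whatever makes the individual pixel differ from the base color of the nail. Eliminating it from the two equations gives Target_Color = Color - Base_Color + Target_Base_Color, which is what the Infer function later in this article computes on the hue and saturation channels, using the median over the nail region as Base_Color.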
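The two equations can be tried out on a tiny array. The following is a minimal NumPy sketch, not the project's actual function: it estimates Base_Color as the median inside the mask, keeps each pixel's Influence, and re-centres it on a target hue and saturation. The name `recolor`, its parameters, and the clipping to 0 are assumptions for illustration.

```python
import numpy as np


def recolor(hsv, mask, target_h, target_s):
    """Shift hue/saturation inside `mask` so their medians match the target.

    hsv:  float32 array (H, W, 3) in OpenCV ranges (H: 0-179, S/V: 0-255)
    mask: boolean array (H, W), True on nail pixels
    """
    out = hsv.copy()
    if not mask.any():  # nothing to recolor
        return out
    for channel, target, upper in ((0, target_h, 179), (1, target_s, 255)):
        values = out[mask, channel]
        base = np.median(values)    # Base_Color estimate
        influence = values - base   # per-pixel Influence
        out[mask, channel] = np.clip(target + influence, 0, upper)
    return out


hsv = np.zeros((2, 2, 3), dtype=np.float32)
hsv[..., 0] = [[10, 12], [14, 90]]      # hue
hsv[..., 1] = [[100, 110], [120, 30]]   # saturation
hsv[..., 2] = 200                       # value (brightness) is left untouched
mask = np.array([[True, True], [True, False]])
print(recolor(hsv, mask, target_h=150, target_s=85)[..., 0])
```

Because only the median is replaced, the small hue and saturation differences between neighbouring pixels survive, so highlights and shading on the nail remain visible after recoloring.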

Wrapping with PyQt5

First, import the required packages

import sys
import cv2
import numpy as np
import math
import time
import collections
import os
from PyQt5.QtWidgets import QWidget, QDesktopWidget, QApplication, QPushButton, QFileDialog, QLabel, QTextEdit, \
    QGridLayout, QFrame, QColorDialog
from PyQt5.QtCore import QTimer
from PyQt5.QtGui import QColor, QImage, QPixmap
import fastdeploy

Next, create a class that inherits from QWidget, named Example here

class Example(QWidget):

Start with initialization, which covers the UI, the camera, and the model

    def __init__(self):
        super().__init__()
        screen = QApplication.desktop().screenGeometry()
        w, h = [screen.width() - 800, screen.height() - 800]
        # w = min(w, int(h/3*4))
        # Display size with a 4:3 aspect ratio
        self.img_width, self.img_height = [w, int(w / 4 * 3)]
        # Inference size: 480 x 360
        self.infer_img_width, self.infer_img_height = [120 * x for x in [4, 3]]
        # Default nail color (Qt HSV: hue 0-359, saturation and value 0-255)
        self.color = QColor()
        self.color.setHsv(300, 85, 255)
        self.ifpic = 0  # 1 while a user-selected picture is used instead of a solid color
        self.initCamera()
        self.initUI()
        self.initModel()

    # Initialize camera-related state
    def initCamera(self):
        # Open the video channel
        self.camera_id = 0  # 0 means the video stream comes from the camera
        self.camera = cv2.VideoCapture()  # video stream
        self.camera.open(self.camera_id)
        # Read frames with a timer, which controls the display frame rate
        self.flush_clock = QTimer()
        self.flush_clock.timeout.connect(self.show_frame)  # call show_frame() on every timeout
        self.flush_clock.start(60)  # fires every 60 ms, so one frame is read and shown every 60 ms

    def initUI(self):
        grid = QGridLayout()
        self.setLayout(grid)

        # Color preview swatch
        self.Color_Frame = QFrame(self)
        self.Color_Frame.setStyleSheet("QWidget { background-color: %s }" % self.color.name())
        grid.addWidget(self.Color_Frame, 0, 0, 6, 1)
        ColorText = QLabel('颜色预览', self)  # "Color preview"
        grid.addWidget(ColorText, 6, 0, 1, 1)

        # Preset color buttons in rows 7-11: pink, beige, light green, cyan-green, rose red
        for row, name in enumerate(['粉色', '米黄', '浅绿', '青绿', '玫红'], start=7):
            Choose_Color = QPushButton(name, self)
            grid.addWidget(Choose_Color, row, 0, 1, 1)
            Choose_Color.clicked.connect(self.choose_color)

        Choose_Pic = QPushButton('自选图片', self)  # "Choose a picture"
        grid.addWidget(Choose_Pic, 13, 0, 1, 1)
        Choose_Pic.clicked.connect(self.showDialog)

        Exit_Exe = QPushButton('退出', self)  # "Exit"
        grid.addWidget(Exit_Exe, 19, 0, 1, 1)
        Exit_Exe.clicked.connect(self.close)

        self.Pred_Box = QLabel()  # label that displays the video
        self.Pred_Box.setFixedSize(self.img_width, self.img_height)
        grid.addWidget(self.Pred_Box, 0, 1, 20, 20)

        # Set the window position and size
        # self.setGeometry(300, 300, 600, 600)
        # Set the window size
        # self.resize(600, 600)
        self.setWindowTitle('test')
        self.show()
        # set center
        # self.center()
        # self.setWindowTitle('Center')
        # self.show()

Create the inference model in initModel

    def initModel(self):
        # Load the model
        model_dir = "../infer_model_specify_size/"
        self.model = fastdeploy.vision.segmentation.PaddleSegModel(
            model_dir + 'model.pdmodel',
            model_dir + 'model.pdiparams',
            model_dir + 'deploy.yaml')

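Read the camera and display the image in show_frame.

    def show_frame(self):
        ok, img = self.camera.read()  # read a frame from the video stream
        if not ok:
            return  # no frame available; wait for the next timer tick
        # Resize the frame to the display size
        img = cv2.resize(img, (self.img_width, self.img_height))
        mask, pred = self.Infer(img)
        # Show the result as a QImage in the video label;
        # the row stride is passed explicitly so that any width displays correctly
        showImage = QImage(pred.data, pred.shape[1], pred.shape[0],
                           pred.strides[0], QImage.Format_RGB888)
        self.Pred_Box.setPixmap(QPixmap.fromImage(showImage))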

The Infer function handles image preprocessing, inference, and rendering.

    def Infer(self, ori_img):
        h, w, c = ori_img.shape
        img = cv2.resize(ori_img, (self.infer_img_width, self.infer_img_height))
        pre = self.model.predict(img)
        mask = np.reshape(pre.label_map, pre.shape) * 255
        mask = np.array(mask).astype('float32')
        # Scale the mask back to the frame size (cv2.resize takes (width, height))
        mask = cv2.resize(mask, (w, h), interpolation=cv2.INTER_NEAREST)
        # cv2.imwrite('mask.jpg', mask)
        hsv_img = cv2.cvtColor(ori_img, cv2.COLOR_BGR2HSV).astype('float32')
        nail = mask > 0
        if np.any(nail):
            # Shift hue (channel 0) and saturation (channel 1) so their medians match the target
            hue_base = np.median(hsv_img[nail, 0])
            sat_base = np.median(hsv_img[nail, 1])
            if self.ifpic == 1:
                hsv_img[nail, 0] = hsv_img[nail, 0] - hue_base + self.choosed_pic[nail, 0]
                hsv_img[nail, 1] = hsv_img[nail, 1] - sat_base + self.choosed_pic[nail, 1]
            else:
                # Qt hue is 0-359 and OpenCV hue is 0-179, hence the division by 2
                hsv_img[nail, 0] = hsv_img[nail, 0] + self.color.getHsv()[0] / 2 - hue_base
                hsv_img[nail, 1] = hsv_img[nail, 1] + self.color.getHsv()[1] - sat_base
        # Clamp hue and saturation to their valid 8-bit ranges
        hsv_img[:, :, 0] = np.clip(hsv_img[:, :, 0], 1, 179)
        hsv_img[:, :, 1] = np.clip(hsv_img[:, :, 1], 1, 255)
        # Convert to RGB, matching QImage.Format_RGB888 in show_frame
        rgb_img = cv2.cvtColor(hsv_img.astype('uint8'), cv2.COLOR_HSV2RGB)
        # cv2.imwrite('result.jpg', rgb_img)
        return mask, rgb_img
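The mask handling in Infer can be tried in isolation. The sketch below reshapes a flat list of class ids, like the one used as `pre.label_map` with `pre.shape`, into a 0/255 mask and enlarges it by nearest-neighbour index lookup. It is a NumPy-only stand-in written for illustration: the function name is hypothetical, and its rounding is not guaranteed to match an image library's nearest-neighbour resize pixel for pixel.

```python
import numpy as np


def label_map_to_mask(label_map, shape, out_h, out_w):
    """Reshape a flat label list to `shape`, then upscale by nearest neighbour."""
    small = np.asarray(label_map, dtype=np.uint8).reshape(shape) * 255
    in_h, in_w = small.shape
    # For each output row/column, pick the source index it falls into
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return small[rows[:, None], cols[None, :]]


# A 2x2 prediction (1 = nail) enlarged to 4x4
mask = label_map_to_mask([0, 1, 1, 0], (2, 2), out_h=4, out_w=4)
print(mask > 0)
```

Nearest-neighbour lookup keeps the mask strictly binary, whereas a smoothing interpolation would introduce in-between values along the nail boundary.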

choose_color provides the interface for switching between colors.

    def choose_color(self):
        self.ifpic = 0  # leave the user-selected-picture mode
        if self.sender().text() == '米黄':
            # Beige: slightly greenish and overexposed; previously (50, 85, 255)
            self.color.setHsv(40, 30, 255)
        elif self.sender().text() == '浅绿':
            # Light green: looks decent
            self.color.setHsv(100, 85, 255)
        elif self.sender().text() == '青绿':
            # Cyan-green: looks decent
            self.color.setHsv(150, 85, 255)
        elif self.sender().text() == '玫红':
            # Rose red (flesh-toned): works, though not especially attractive
            self.color.setHsv(350, 160, 255)
        else:
            # Pink: the default color, works very well
            self.color.setHsv(300, 85, 255)
        self.Color_Frame.setStyleSheet("QWidget { background-color: %s }" % self.color.name())
        # print(self.color.name)

    def showDialog(self):
        fname = QFileDialog.getOpenFileName(self, 'Open file', '/home')
        try:
            print(fname)
            # cv2.imread returns None for an unreadable path, which makes cv2.resize raise
            choosed_pic = cv2.imread(fname[0])
            choosed_pic = cv2.resize(choosed_pic, (self.img_width, self.img_height))
            self.choosed_pic = cv2.cvtColor(choosed_pic, cv2.COLOR_BGR2HSV).astype('float32')
            self.ifpic = 1
        except Exception:
            # Fall back to the solid-color mode if the picture cannot be loaded
            self.ifpic = 0
            print(self.ifpic)
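The if/elif chain in choose_color can also be written as a lookup table. The sketch below copies the five presets from the code above and adds a helper for the Qt-to-OpenCV hue conversion. It is one possible arrangement rather than the project's code: `PRESETS`, `qt_hsv_to_opencv`, and `preset_for` are hypothetical names, and the helper uses integer division where Infer uses `/ 2`.

```python
# Qt HSV presets copied from choose_color: hue 0-359, saturation 0-255, value 0-255
PRESETS = {
    "粉色": (300, 85, 255),   # pink (default)
    "米黄": (40, 30, 255),    # beige
    "浅绿": (100, 85, 255),   # light green
    "青绿": (150, 85, 255),   # cyan-green
    "玫红": (350, 160, 255),  # rose red
}


def qt_hsv_to_opencv(h, s, v):
    """Convert Qt's HSV (H in 0-359) to OpenCV's 8-bit HSV (H in 0-179)."""
    return (h // 2) % 180, s, v


def preset_for(label):
    """Look up a button label; unknown labels fall back to pink."""
    return PRESETS.get(label, PRESETS["粉色"])


print(qt_hsv_to_opencv(*preset_for("玫红")))
```

With a table like this, adding a color only needs a new dictionary entry and a button whose label matches the key.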

Finally, instantiate the class in the main block

if __name__ == '__main__':
    app = QApplication(sys.argv)
    ex = Example()
    sys.exit(app.exec_())

FastDeploy deployment optimization

Import the package

import fastdeploy

Replace the initModel function in the nail-preview code with the following

    def initModel(self):
        # Load the model
        model_dir = "../infer_model/"
        self.model = fastdeploy.vision.segmentation.PaddleSegModel(
            model_dir + 'model.pdmodel',
            model_dir + 'model.pdiparams',
            model_dir + 'deploy.yaml')

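Replace the Infer function in the nail-preview code with the following

    def Infer(self, ori_img):
        h, w, c = ori_img.shape
        # Resize with OpenCV, which is already imported; cv2.resize takes (width, height)
        img = cv2.resize(ori_img, (self.infer_img_width, self.infer_img_height))
        pre = self.model.predict(img)
        mask = np.reshape(pre.label_map, pre.shape) * 255
        mask = np.array(mask, np.uint8)
        mask = cv2.resize(mask, (w, h), interpolation=cv2.INTER_NEAREST)
        hsv_img = cv2.cvtColor(ori_img, cv2.COLOR_BGR2HSV)
        # Overwrite hue and saturation on nail pixels (Qt hue 0-359 -> OpenCV hue 0-179)
        hsv_img[mask > 0, 0] = self.color.getHsv()[0] / 2 * 1.1
        hsv_img[mask > 0, 1] = self.color.getHsv()[1]
        # Convert to RGB, matching QImage.Format_RGB888 in show_frame
        rgb_img = cv2.cvtColor(hsv_img, cv2.COLOR_HSV2RGB)
        return mask, rgb_img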

Summary

WAVE SUMMIT+2022
