移动窗口为什么能有全局特征抽取的能力

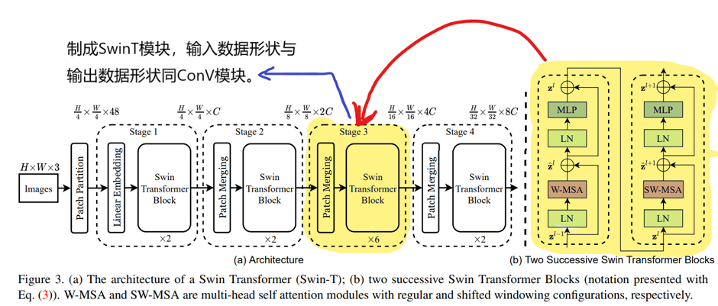

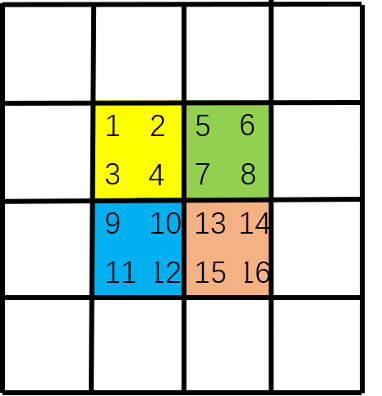

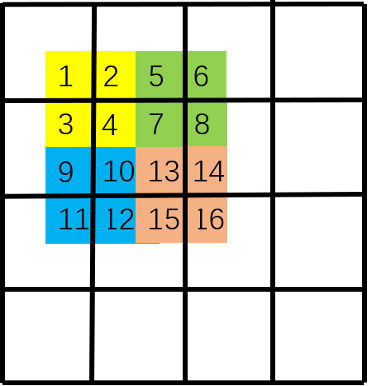

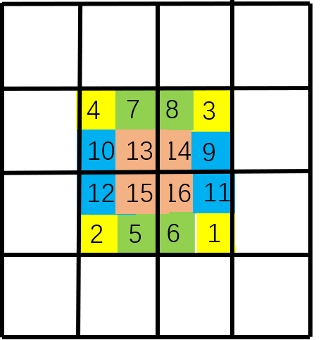

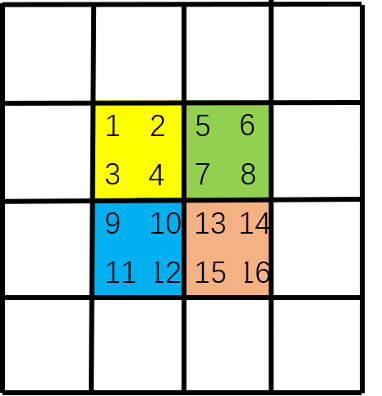

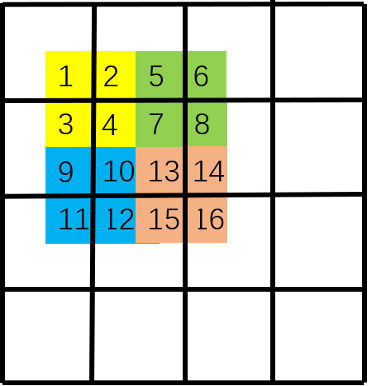

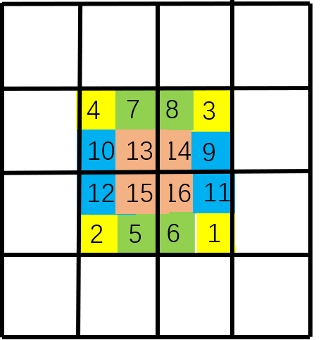

Swin Transformer中注意力机制是如何运行的,如下图。首先,我们对每个颜色内的窗口进行自注意力运算,如[1,2,3,4],[5,6,7,8],[9,10,11,12],[13,14,15,16]每个列表内的元素做自注意力运算。

SwinT接口的使用方式

#导入包,miziha中含有SwinT模块

import paddle

import paddle.nn as nn

import miziha

#创建测试数据

test_data = paddle.ones([2, 96, 224, 224]) #[N, C, H, W]

print(f'输入尺寸:{test_data.shape}')

#创建SwinT层

'''

参数:

in_channels: 输入通道数,同卷积

out_channels: 输出通道数,同卷积

以下为SwinT独有的,类似于卷积中的核大小,步幅,填充等

input_resolution: 输入图像的尺寸大小

num_heads: 多头注意力的头数,应该设置为能被输入通道数整除的值

window_size: 做注意力运算的窗口的大小,窗口越大,运算就会越慢

qkv_bias: qkv的偏置,默认None

qk_scale: qkv的尺度,注意力大小的一个归一化,默认None #Swin-V1版本

dropout: 默认None

attention_dropout: 默认None

droppath: 默认None

downsample: 下采样,默认False,设置为True时,输出的图片大小会变为输入的一半

'''

swint1 = miziha.SwinT(in_channels=96, out_channels=256, input_resolution=(224,224), num_heads=8, window_size=7, downsample=False)

swint2 = miziha.SwinT(in_channels=96, out_channels=256, input_resolution=(224,224), num_heads=8, window_size=7, downsample=True)

conv1 = nn.Conv2D(in_channels=96, out_channels=256, kernel_size=3, stride=1, padding=1)

#前向传播,打印输出形状

output1 = swint1(test_data)

output2 = swint2(test_data)

output3 = conv1(test_data)

print(f'SwinT的输出尺寸:{output1.shape}')

print(f'下采样的SwinT的输出尺寸:{output2.shape}') #下采样

print(f'Conv2D的输出尺寸:{output3.shape}')

输入尺寸:[2, 96, 224, 224]

SwinT的输出尺寸:[2, 256, 224, 224]

下采样的SwinT的输出尺寸:[2, 256, 112, 112]

Conv2D的输出尺寸:[2, 256, 224, 224]使用SwinT替换Resnet中Conv2D模型

创建Swin Resnet并进行测试!

源码链接:

为了展示实际的效果,我们使用Cifar10数据集(这是一个任务较简单且数据较少的数据集)对模型精度,速度两方面给出了结果,证明了SwinT模块在效果上至少是不差于Conv2D的,由于运行整个流程需要6个小时,因此没有过多调节超参数防止过拟合。虽然普通的resnet50可以调高batch来提高速度,但是batch大小是与模型正则化有关的一个参数,因此将batch都控制在了一个大小进行对比测试。

首先创建卷积批归一化块,在resnet50中使用的是batchnorm,而在SwinT模块中已经自带了layernorm,因此这块代码不需要做改动。

# ResNet模型代码

# ResNet中使用了BatchNorm层,在卷积层的后面加上BatchNorm以提升数值稳定性

# 定义卷积批归一化块

class ConvBNLayer(paddle.nn.Layer):

def __init__(self,

num_channels,

num_filters,

filter_size,

stride=1,

groups=1,

act=None):

# num_channels, 卷积层的输入通道数

# num_filters, 卷积层的输出通道数

# stride, 卷积层的步幅

# groups, 分组卷积的组数,默认groups=1不使用分组卷积

super(ConvBNLayer, self).__init__()

# 创建卷积层

self._conv = nn.Conv2D(

in_channels=num_channels,

out_channels=num_filters,

kernel_size=filter_size,

stride=stride,

padding=(filter_size - 1) // 2,

groups=groups,

bias_attr=False)

# 创建BatchNorm层

self._batch_norm = paddle.nn.BatchNorm2D(num_filters)

self.act = act

def forward(self, inputs):

y = self._conv(inputs)

y = self._batch_norm(y)

if self.act == 'leaky':

y = F.leaky_relu(x=y, negative_slope=0.1)

elif self.act == 'relu':

y = F.relu(x=y)

return y

# 定义残差块

# 每个残差块会对输入图片做三次卷积,然后跟输入图片进行短接

# 如果残差块中第三次卷积输出特征图的形状与输入不一致,则对输入图片做1x1卷积,将其输出形状调整成一致

class BottleneckBlock(paddle.nn.Layer):

def __init__(self,

num_channels,

num_filters,

stride,

resolution,

num_heads=8,

window_size=8,

downsample=False,

shortcut=True):

super(BottleneckBlock, self).__init__()

# 创建第一个卷积层 1x1

self.conv0 = ConvBNLayer(

num_channels=num_channels,

num_filters=num_filters,

filter_size=1,

act='relu')

# 创建第二个卷积层 3x3

# self.conv1 = ConvBNLayer(

# num_channels=num_filters,

# num_filters=num_filters,

# filter_size=3,

# stride=stride,

# act='relu')

#如果尺寸为7x7,启动cnn,因为这个大小不容易划分等大小窗口了

# 使用SwinT进行替换,如下

if resolution == (7,7):

self.swin = ConvBNLayer(num_channels=num_filters,

num_filters=num_filters,

filter_size=3,

stride=1,

act='relu')

else:

self.swin = miziha.SwinT(in_channels=num_filters,

out_channels=num_filters,

input_resolution=resolution,

num_heads=num_heads,

window_size=window_size,

downsample=downsample)

# 创建第三个卷积 1x1,但输出通道数乘以4

self.conv2 = ConvBNLayer(

num_channels=num_filters,

num_filters=num_filters * 4,

filter_size=1,

act=None)

# 如果conv2的输出跟此残差块的输入数据形状一致,则shortcut=True

# 否则shortcut = False,添加1个1x1的卷积作用在输入数据上,使其形状变成跟conv2一致

if not shortcut:

self.short = ConvBNLayer(

num_channels=num_channels,

num_filters=num_filters * 4,

filter_size=1,

stride=stride)

self.shortcut = shortcut

self._num_channels_out = num_filters * 4

def forward(self, inputs):

y = self.conv0(inputs)

swin = self.swin(y)

conv2 = self.conv2(swin)

# 如果shortcut=True,直接将inputs跟conv2的输出相加

# 否则需要对inputs进行一次卷积,将形状调整成跟conv2输出一致

if self.shortcut:

short = inputs

else:

short = self.short(inputs)

y = paddle.add(x=short, y=conv2)

y = F.relu(y)

return y

#搭建SwinResnet

class SwinResnet(paddle.nn.Layer):

def __init__(self, num_classes=12):

super().__init__()

depth = [3, 4, 6, 3]

# 残差块中使用到的卷积的输出通道数,图片的尺寸信息,多头注意力参数

num_filters = [64, 128, 256, 512]

resolution_list = [[(56,56),(56,56)],[(56,56),(28,28)],[(28,28),[14,14]],[(14,14),(7,7)]]

num_head_list = [4, 8, 16, 32]

# SwinResnet的第一个模块,包含1个7x7卷积,后面跟着1个最大池化层

#[3, 224, 224]

self.conv = ConvBNLayer(

num_channels=3,

num_filters=64,

filter_size=7,

stride=2,

act='relu')

#[64, 112, 112]

self.pool2d_max = nn.MaxPool2D(

kernel_size=3,

stride=2,

padding=1)

#[64, 56, 56]

# SwinResnet的第二到第五个模块c2、c3、c4、c5

self.bottleneck_block_list = []

num_channels = 64

for block in range(len(depth)):

shortcut = False

for i in range(depth[block]):

# c3、c4、c5将会在第一个残差块使用downsample=True;其余所有残差块downsample=False

bottleneck_block = self.add_sublayer(

'bb_%d_%d' % (block, i),

BottleneckBlock(

num_channels=num_channels,

num_filters=num_filters[block],

stride=2 if i == 0 and block != 0 else 1,

downsample=True if i == 0 and block != 0 else False,

num_heads=num_head_list[block],

resolution=resolution_list[block][0] if i == 0 and block != 0 else resolution_list[block][1],

window_size=7,

shortcut=shortcut))

num_channels = bottleneck_block._num_channels_out

self.bottleneck_block_list.append(bottleneck_block)

shortcut = True

# 在c5的输出特征图上使用全局池化

self.pool2d_avg = paddle.nn.AdaptiveAvgPool2D(output_size=1)

# stdv用来作为全连接层随机初始化参数的方差

import math

stdv1 = 1.0 / math.sqrt(2048 * 1.0)

stdv2 = 1.0 / math.sqrt(256 * 1.0)

# 创建全连接层,输出大小为类别数目,经过残差网络的卷积和全局池化后,

# 卷积特征的维度是[B,2048,1,1],故最后一层全连接的输入维度是2048

self.out = nn.Sequential(nn.Dropout(0.2),

nn.Linear(in_features=2048, out_features=256,

weight_attr=paddle.ParamAttr(

initializer=paddle.nn.initializer.Uniform(-stdv1, stdv1))),

nn.LayerNorm(256),

nn.Dropout(0.2),

nn.LeakyReLU(),

nn.Linear(in_features=256,out_features=num_classes,

weight_attr=paddle.ParamAttr(

initializer=paddle.nn.initializer.Uniform(-stdv2, stdv2)))

)

def forward(self, inputs):

y = self.conv(inputs)

y = self.pool2d_max(y)

for bottleneck_block in self.bottleneck_block_list:

y = bottleneck_block(y)

y = self.pool2d_avg(y)

y = paddle.reshape(y, [y.shape[0], -1])

y = self.out(y)

return yMode = 0 #修改此处即可训练三个不同的模型

import paddle

import paddle.nn as nn

from paddle.vision.models import resnet50, vgg16, LeNet

from paddle.vision.datasets import Cifar10

from paddle.optimizer import Momentum

from paddle.regularizer import L2Decay

from paddle.nn import CrossEntropyLoss

from paddle.metric import Accuracy

from paddle.vision.transforms import Transpose, Resize, Compose

from model import SwinResnet

# 确保从paddle.vision.datasets.Cifar10中加载的图像数据是np.ndarray类型

paddle.vision.set_image_backend('cv2')

# 加载模型

resnet = resnet50(pretrained=False, num_classes=10)

import math

stdv1 = 1.0 / math.sqrt(2048 * 1.0)

stdv2 = 1.0 / math.sqrt(256 * 1.0)

#修改resnet最后一层,加强模型拟合能力

resnet.fc = nn.Sequential(nn.Dropout(0.2),

nn.Linear(in_features=2048, out_features=256,

weight_attr=paddle.ParamAttr(

initializer=paddle.nn.initializer.Uniform(-stdv1, stdv1))),

nn.LayerNorm(256),

nn.Dropout(0.2),

nn.LeakyReLU(),

nn.Linear(in_features=256,out_features=10,

weight_attr=paddle.ParamAttr(

initializer=paddle.nn.initializer.Uniform(-stdv2, stdv2)))

)

model = SwinResnet(num_classes=10) if Mode == 0 else resnet

#打包模型

model = paddle.Model(model)

# 创建图像变换

transforms = Compose([Resize((224,224)), Transpose()]) if Mode != 2 else Compose([Resize((32, 32)), Transpose()])

# 使用Cifar10数据集

train_dataset = Cifar10(mode='train', transform=transforms)

valid_dadaset = Cifar10(mode='test', transform=transforms)

# 定义优化器

optimizer = Momentum(learning_rate=0.01,

momentum=0.9,

weight_decay=L2Decay(1e-4),

parameters=model.parameters())

# 进行训练前准备

model.prepare(optimizer, CrossEntropyLoss(), Accuracy(topk=(1, 5)))

# 启动训练

model.fit(train_dataset,

valid_dadaset,

epochs=40,

batch_size=80,

save_dir="./output",

num_workers=8)测试结果分析

以下res224指Resnet50输入图像尺寸为224x224,res32指Resnet50输入图像尺寸为32x32。

SwinT的应用场景

总结

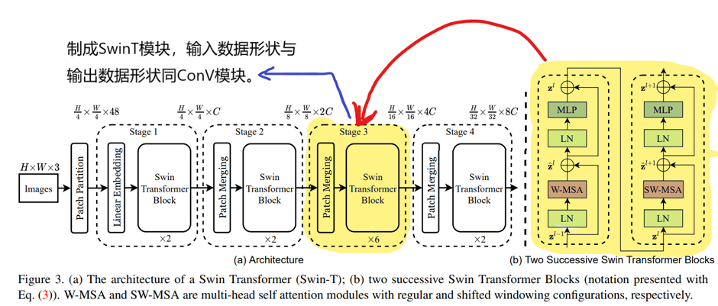

我们将Swin Transformer最核心的模块制作成了SwinT接口,使用形式类似Conv2D。首先,这极大的方便了开发者们进行网络模型的编写,尤其是要自定义模型架构时,并混合使用Conv2D和SwinT;然后,我们认为SwinT接口的内容非常简单并且高效,因此这个接口短期内将不会过时,可以拥有时效性上的保证;最后,我们真实地对该接口进行了测试,证明了该接口的易用性以及精度性能。

相关推荐

关注【飞桨PaddlePaddle】公众号

获取更多技术内容~

移动窗口为什么能有全局特征抽取的能力

Swin Transformer中注意力机制是如何运行的,如下图。首先,我们对每个颜色内的窗口进行自注意力运算,如[1,2,3,4],[5,6,7,8],[9,10,11,12],[13,14,15,16]每个列表内的元素做自注意力运算。

SwinT接口的使用方式

#导入包,miziha中含有SwinT模块

import paddle

import paddle.nn as nn

import miziha

#创建测试数据

test_data = paddle.ones([2, 96, 224, 224]) #[N, C, H, W]

print(f'输入尺寸:{test_data.shape}')

#创建SwinT层

'''

参数:

in_channels: 输入通道数,同卷积

out_channels: 输出通道数,同卷积

以下为SwinT独有的,类似于卷积中的核大小,步幅,填充等

input_resolution: 输入图像的尺寸大小

num_heads: 多头注意力的头数,应该设置为能被输入通道数整除的值

window_size: 做注意力运算的窗口的大小,窗口越大,运算就会越慢

qkv_bias: qkv的偏置,默认None

qk_scale: qkv的尺度,注意力大小的一个归一化,默认None #Swin-V1版本

dropout: 默认None

attention_dropout: 默认None

droppath: 默认None

downsample: 下采样,默认False,设置为True时,输出的图片大小会变为输入的一半

'''

swint1 = miziha.SwinT(in_channels=96, out_channels=256, input_resolution=(224,224), num_heads=8, window_size=7, downsample=False)

swint2 = miziha.SwinT(in_channels=96, out_channels=256, input_resolution=(224,224), num_heads=8, window_size=7, downsample=True)

conv1 = nn.Conv2D(in_channels=96, out_channels=256, kernel_size=3, stride=1, padding=1)

#前向传播,打印输出形状

output1 = swint1(test_data)

output2 = swint2(test_data)

output3 = conv1(test_data)

print(f'SwinT的输出尺寸:{output1.shape}')

print(f'下采样的SwinT的输出尺寸:{output2.shape}') #下采样

print(f'Conv2D的输出尺寸:{output3.shape}')

输入尺寸:[2, 96, 224, 224]

SwinT的输出尺寸:[2, 256, 224, 224]

下采样的SwinT的输出尺寸:[2, 256, 112, 112]

Conv2D的输出尺寸:[2, 256, 224, 224]使用SwinT替换Resnet中Conv2D模型

创建Swin Resnet并进行测试!

源码链接:

为了展示实际的效果,我们使用Cifar10数据集(这是一个任务较简单且数据较少的数据集)对模型精度,速度两方面给出了结果,证明了SwinT模块在效果上至少是不差于Conv2D的,由于运行整个流程需要6个小时,因此没有过多调节超参数防止过拟合。虽然普通的resnet50可以调高batch来提高速度,但是batch大小是与模型正则化有关的一个参数,因此将batch都控制在了一个大小进行对比测试。

首先创建卷积批归一化块,在resnet50中使用的是batchnorm,而在SwinT模块中已经自带了layernorm,因此这块代码不需要做改动。

# ResNet模型代码

# ResNet中使用了BatchNorm层,在卷积层的后面加上BatchNorm以提升数值稳定性

# 定义卷积批归一化块

class ConvBNLayer(paddle.nn.Layer):

def __init__(self,

num_channels,

num_filters,

filter_size,

stride=1,

groups=1,

act=None):

# num_channels, 卷积层的输入通道数

# num_filters, 卷积层的输出通道数

# stride, 卷积层的步幅

# groups, 分组卷积的组数,默认groups=1不使用分组卷积

super(ConvBNLayer, self).__init__()

# 创建卷积层

self._conv = nn.Conv2D(

in_channels=num_channels,

out_channels=num_filters,

kernel_size=filter_size,

stride=stride,

padding=(filter_size - 1) // 2,

groups=groups,

bias_attr=False)

# 创建BatchNorm层

self._batch_norm = paddle.nn.BatchNorm2D(num_filters)

self.act = act

def forward(self, inputs):

y = self._conv(inputs)

y = self._batch_norm(y)

if self.act == 'leaky':

y = F.leaky_relu(x=y, negative_slope=0.1)

elif self.act == 'relu':

y = F.relu(x=y)

return y

# 定义残差块

# 每个残差块会对输入图片做三次卷积,然后跟输入图片进行短接

# 如果残差块中第三次卷积输出特征图的形状与输入不一致,则对输入图片做1x1卷积,将其输出形状调整成一致

class BottleneckBlock(paddle.nn.Layer):

def __init__(self,

num_channels,

num_filters,

stride,

resolution,

num_heads=8,

window_size=8,

downsample=False,

shortcut=True):

super(BottleneckBlock, self).__init__()

# 创建第一个卷积层 1x1

self.conv0 = ConvBNLayer(

num_channels=num_channels,

num_filters=num_filters,

filter_size=1,

act='relu')

# 创建第二个卷积层 3x3

# self.conv1 = ConvBNLayer(

# num_channels=num_filters,

# num_filters=num_filters,

# filter_size=3,

# stride=stride,

# act='relu')

#如果尺寸为7x7,启动cnn,因为这个大小不容易划分等大小窗口了

# 使用SwinT进行替换,如下

if resolution == (7,7):

self.swin = ConvBNLayer(num_channels=num_filters,

num_filters=num_filters,

filter_size=3,

stride=1,

act='relu')

else:

self.swin = miziha.SwinT(in_channels=num_filters,

out_channels=num_filters,

input_resolution=resolution,

num_heads=num_heads,

window_size=window_size,

downsample=downsample)

# 创建第三个卷积 1x1,但输出通道数乘以4

self.conv2 = ConvBNLayer(

num_channels=num_filters,

num_filters=num_filters * 4,

filter_size=1,

act=None)

# 如果conv2的输出跟此残差块的输入数据形状一致,则shortcut=True

# 否则shortcut = False,添加1个1x1的卷积作用在输入数据上,使其形状变成跟conv2一致

if not shortcut:

self.short = ConvBNLayer(

num_channels=num_channels,

num_filters=num_filters * 4,

filter_size=1,

stride=stride)

self.shortcut = shortcut

self._num_channels_out = num_filters * 4

def forward(self, inputs):

y = self.conv0(inputs)

swin = self.swin(y)

conv2 = self.conv2(swin)

# 如果shortcut=True,直接将inputs跟conv2的输出相加

# 否则需要对inputs进行一次卷积,将形状调整成跟conv2输出一致

if self.shortcut:

short = inputs

else:

short = self.short(inputs)

y = paddle.add(x=short, y=conv2)

y = F.relu(y)

return y

#搭建SwinResnet

class SwinResnet(paddle.nn.Layer):

def __init__(self, num_classes=12):

super().__init__()

depth = [3, 4, 6, 3]

# 残差块中使用到的卷积的输出通道数,图片的尺寸信息,多头注意力参数

num_filters = [64, 128, 256, 512]

resolution_list = [[(56,56),(56,56)],[(56,56),(28,28)],[(28,28),[14,14]],[(14,14),(7,7)]]

num_head_list = [4, 8, 16, 32]

# SwinResnet的第一个模块,包含1个7x7卷积,后面跟着1个最大池化层

#[3, 224, 224]

self.conv = ConvBNLayer(

num_channels=3,

num_filters=64,

filter_size=7,

stride=2,

act='relu')

#[64, 112, 112]

self.pool2d_max = nn.MaxPool2D(

kernel_size=3,

stride=2,

padding=1)

#[64, 56, 56]

# SwinResnet的第二到第五个模块c2、c3、c4、c5

self.bottleneck_block_list = []

num_channels = 64

for block in range(len(depth)):

shortcut = False

for i in range(depth[block]):

# c3、c4、c5将会在第一个残差块使用downsample=True;其余所有残差块downsample=False

bottleneck_block = self.add_sublayer(

'bb_%d_%d' % (block, i),

BottleneckBlock(

num_channels=num_channels,

num_filters=num_filters[block],

stride=2 if i == 0 and block != 0 else 1,

downsample=True if i == 0 and block != 0 else False,

num_heads=num_head_list[block],

resolution=resolution_list[block][0] if i == 0 and block != 0 else resolution_list[block][1],

window_size=7,

shortcut=shortcut))

num_channels = bottleneck_block._num_channels_out

self.bottleneck_block_list.append(bottleneck_block)

shortcut = True

# 在c5的输出特征图上使用全局池化

self.pool2d_avg = paddle.nn.AdaptiveAvgPool2D(output_size=1)

# stdv用来作为全连接层随机初始化参数的方差

import math

stdv1 = 1.0 / math.sqrt(2048 * 1.0)

stdv2 = 1.0 / math.sqrt(256 * 1.0)

# 创建全连接层,输出大小为类别数目,经过残差网络的卷积和全局池化后,

# 卷积特征的维度是[B,2048,1,1],故最后一层全连接的输入维度是2048

self.out = nn.Sequential(nn.Dropout(0.2),

nn.Linear(in_features=2048, out_features=256,

weight_attr=paddle.ParamAttr(

initializer=paddle.nn.initializer.Uniform(-stdv1, stdv1))),

nn.LayerNorm(256),

nn.Dropout(0.2),

nn.LeakyReLU(),

nn.Linear(in_features=256,out_features=num_classes,

weight_attr=paddle.ParamAttr(

initializer=paddle.nn.initializer.Uniform(-stdv2, stdv2)))

)

def forward(self, inputs):

y = self.conv(inputs)

y = self.pool2d_max(y)

for bottleneck_block in self.bottleneck_block_list:

y = bottleneck_block(y)

y = self.pool2d_avg(y)

y = paddle.reshape(y, [y.shape[0], -1])

y = self.out(y)

return yMode = 0 #修改此处即可训练三个不同的模型

import paddle

import paddle.nn as nn

from paddle.vision.models import resnet50, vgg16, LeNet

from paddle.vision.datasets import Cifar10

from paddle.optimizer import Momentum

from paddle.regularizer import L2Decay

from paddle.nn import CrossEntropyLoss

from paddle.metric import Accuracy

from paddle.vision.transforms import Transpose, Resize, Compose

from model import SwinResnet

# 确保从paddle.vision.datasets.Cifar10中加载的图像数据是np.ndarray类型

paddle.vision.set_image_backend('cv2')

# 加载模型

resnet = resnet50(pretrained=False, num_classes=10)

import math

stdv1 = 1.0 / math.sqrt(2048 * 1.0)

stdv2 = 1.0 / math.sqrt(256 * 1.0)

#修改resnet最后一层,加强模型拟合能力

resnet.fc = nn.Sequential(nn.Dropout(0.2),

nn.Linear(in_features=2048, out_features=256,

weight_attr=paddle.ParamAttr(

initializer=paddle.nn.initializer.Uniform(-stdv1, stdv1))),

nn.LayerNorm(256),

nn.Dropout(0.2),

nn.LeakyReLU(),

nn.Linear(in_features=256,out_features=10,

weight_attr=paddle.ParamAttr(

initializer=paddle.nn.initializer.Uniform(-stdv2, stdv2)))

)

model = SwinResnet(num_classes=10) if Mode == 0 else resnet

#打包模型

model = paddle.Model(model)

# 创建图像变换

transforms = Compose([Resize((224,224)), Transpose()]) if Mode != 2 else Compose([Resize((32, 32)), Transpose()])

# 使用Cifar10数据集

train_dataset = Cifar10(mode='train', transform=transforms)

valid_dadaset = Cifar10(mode='test', transform=transforms)

# 定义优化器

optimizer = Momentum(learning_rate=0.01,

momentum=0.9,

weight_decay=L2Decay(1e-4),

parameters=model.parameters())

# 进行训练前准备

model.prepare(optimizer, CrossEntropyLoss(), Accuracy(topk=(1, 5)))

# 启动训练

model.fit(train_dataset,

valid_dadaset,

epochs=40,

batch_size=80,

save_dir="./output",

num_workers=8)测试结果分析

以下res224指Resnet50输入图像尺寸为224x224,res32指Resnet50输入图像尺寸为32x32。

SwinT的应用场景

总结

我们将Swin Transformer最核心的模块制作成了SwinT接口,使用形式类似Conv2D。首先,这极大的方便了开发者们进行网络模型的编写,尤其是要自定义模型架构时,并混合使用Conv2D和SwinT;然后,我们认为SwinT接口的内容非常简单并且高效,因此这个接口短期内将不会过时,可以拥有时效性上的保证;最后,我们真实地对该接口进行了测试,证明了该接口的易用性以及精度性能。

相关推荐

关注【飞桨PaddlePaddle】公众号

获取更多技术内容~